aorus 3080 lcd screen quotation

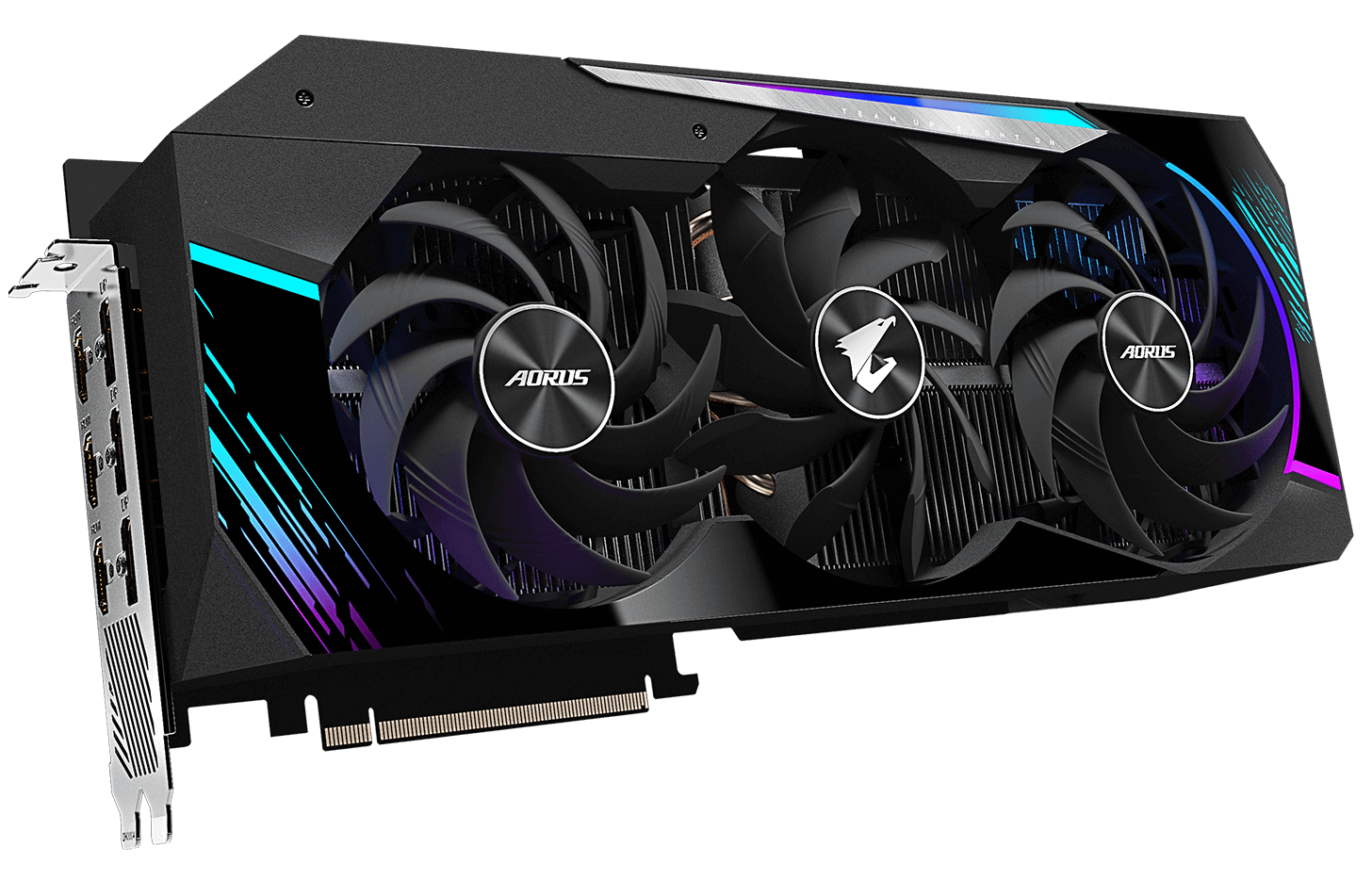

AORUS, the premium gaming brand from GIGABYTE, has launched a completely new series of RTX 30 graphics cards, including the RTX 3090 Xtreme, RTX 3090 Master, RTX 3080 Xtreme, and RTX 3080 Master.

Besides excellent cooling and superior performance, LCD Edge View is another highlight of the AORUS RTX 30 series graphics cards. LCD Edge View is a small LCD located on the top edge of the graphics card. What can users do with this small LCD? Let’s find out.

LCD Edge View is an LCD located on the graphics card. You can use it to display GPU info including temperature, usage, clock speed, fan speed, VRAM usage, VRAM clock and total card power. All of this information can be cycled through one item at a time, or you can show only selected items on the LCD.
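For readers who want the same metrics on the host side, they can be read through NVIDIA's `nvidia-smi` command-line tool. The following is a minimal sketch, not how LCD Edge View or RGB Fusion works internally: the dictionary labels, the function names, and the sample line in the usage note are illustrative assumptions, while the query field names are standard `nvidia-smi --query-gpu` fields.

```python
import subprocess

# Left: our own labels (hypothetical). Right: nvidia-smi query field names,
# chosen to mirror the metrics the LCD can display.
FIELDS = {
    "temperature_c": "temperature.gpu",
    "gpu_usage_pct": "utilization.gpu",
    "core_clock_mhz": "clocks.gr",
    "fan_speed_pct": "fan.speed",
    "vram_used_mib": "memory.used",
    "vram_clock_mhz": "clocks.mem",
    "power_draw_w": "power.draw",
}


def _to_float(text):
    """Convert one CSV cell to float; return None for values like '[N/A]'."""
    try:
        return float(text)
    except ValueError:
        return None


def parse_stats(csv_line):
    """Turn one CSV line of nvidia-smi output into a {label: value} dict."""
    values = [v.strip() for v in csv_line.strip().split(",")]
    if len(values) != len(FIELDS):
        raise ValueError(f"expected {len(FIELDS)} values, got {len(values)}")
    return {label: _to_float(v) for label, v in zip(FIELDS, values)}


def read_gpu_stats(gpu_index=0):
    """Query the driver once; requires nvidia-smi to be on the PATH."""
    cmd = [
        "nvidia-smi",
        f"--id={gpu_index}",
        "--query-gpu=" + ",".join(FIELDS.values()),
        "--format=csv,noheader,nounits",
    ]
    out = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_stats(out.splitlines()[0])
```

On a machine with the NVIDIA driver installed, `read_gpu_stats()` returns one snapshot. The parser can also be tried without a GPU by passing a made-up line such as `parse_stats("65, 98, 1905, 60, 8123, 9501, 370.12")`.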

There are also three different display styles available, so users can choose the one they prefer. And it is not just GPU info: the FPS (frames per second) of a game or other application can be displayed on LCD Edge View as well.

The LCD Edge View can also show customized content including text, pictures or even short GIF animations. Users can enter their preferred text and set the font size, bold or italic. It also supports multiple languages, so users can enter whatever type of text they want.

For pictures, LCD Edge View allows users to upload a JPEG file, and the AORUS RGB Fusion software lets them choose which region of the picture should be shown. The support for short GIF animations is the most interesting part.

Users can upload a short animation in GIF format to be shown on the LCD, so they can easily give the graphics card their own style. All of the customizations above can be done via the AORUS RGB Fusion software.

There’s something more interesting about LCD Edge View: the little CHIBI. CHIBI is a little falcon living digitally in the LCD Edge View, and it grows up as users spend more time with their graphics card. Users can always check on their little CHIBI through the LCD Edge View and watch it eat, sleep or fly around, which is quite interactive and interesting.

In conclusion, LCD Edge View can display a series of useful GPU information, customized text, pictures, and animations, allowing users to build up the graphics card with their own style. Users can also have more interaction with their card via the little CHIBI, the exclusive little digital falcon living inside the LCD Edge View, which brings more fun while playing with the graphics card.

With the AORUS XTREME, Gigabyte brings a mightily impressive product to the market. It's deeply factory tweaked, comes with extended power utilization, and has a cooler as thick as a brick. Next to that, aesthetically it is a very pleasing product, with very subtle RGB elements and of course that LCD info/animation screen. Now, you'd think that with the extra power available, the beefed-up VRM, and the increased Boost clock frequency, this card would be tremendously faster than reference. Ehm, no. We have stated this many times already: boost frequency matters less these days, as the power limiter dumbs down performance the second your max wattage has been reached. For this product set in performance mode, that means 4% to 5% additional performance out of the box compared to reference.

Our performance paragraph is a generic paragraph used on all RTX 3080 reviews, as the performance is more or less the same for all cards and brands. Gaming it can do well, with exceptional values. Yes, at Full HD, you'll quite often be bottlenecked and CPU limited. But even there, in some games with proper programming and the right API (DX12/ASYNC), the sheer increase in performance is staggering.

The good old rasterizer engine first, as hey, it is still the leading factor. Purely speaking from a shading/rasterizing point of view, you're looking at 125% to 160% performance increases (relative) over the similarly priced GeForce RTX 2080 (SUPER), so that is a tremendous step. The number of shader processors is staggering. The new FP32/INT32 combo clusters remain a compromise that will work exceptionally well in most use cases, but not all of them. But even then, there are so many shader cores that not once was the tested graphics card slower than an RTX 2080 Ti; in fact (and I do mean in GPU bound situations), the RTX 3080 stays ahead by at least a margin of a relative 125%, but more often 150% and even 160%.

Performance-wise we can finally say, hey, this is a true Ultra HD capable graphics card (aside from Flight Simulator 2020, haha, that title needs D3D12/ASYNC and some DLSS!). The good news is that any game that uses traditional rendering will run excellently at 3840x2160. Games that can ray trace and manage DLSS also become playable in UHD. A good example was Battlefield V with raytracing and DLSS enabled, in Ultra HD now running in that 75 FPS bracket. Well, you've seen the numbers in the review; I'll mute now.

As for DXR raytracing and Tensor performance, the RTX 30 series has received new Tensor and RT cores. So don't let the RT and Tensor core count confuse you. They're located close inside that rendering engine, have become more efficient, and that shows.

If we look at an RTX 2080 with Port Royal, we will hit almost 30 FPS. The RTX 3080 nearly doubles that at 53 FPS. Tensor cores are harder to measure, but overall from what we have seen, it's all in good balance. Overall though, the GeForce RTX 3080 starts to make sense at a Quad HD resolution (2560x1440), but again I deem this to be an Ultra HD targeted product. In contrast, for 2560x1440, I'd see the GeForce RTX 3070 playing a more important role in terms of sense and value for money. At Full HD, then, the inevitable GeForce RTX 3060, whenever that may be released. Games like Red Dead Redemption will make you aim, shoot, and smile at 70 FPS in UHD resolutions with the very best graphics settings. As always when comparing apples and oranges, the performance results vary here and there, as each architecture offers advantages and disadvantages in certain game render workloads.

So, for the content creators among us, have you seen the Blender and V-Ray NEXT results? If not, go to page 30 of this review, and your eyes will pop out. The sheer compute performance has nearly doubled, a step in the right direction.

We need to stop for a second and talk VRAM, aka framebuffer memory. The GeForce RTX 3080 was fitted with new GDDR6X memory; it clocks in at 19 Gbps, and that is a freakfest of memory bandwidth, which the graphics card really likes. You'll get 10GB of it. I can also tell you that there are plans for a 20GB version. We think the 20GB was initially to be released as the default, but for reasons none other than the bill of materials used, it became 10GB. In the year 2020, that is a very decent amount of graphics memory. However, signals are that the 20GB version may become available later for those who want to run Flight Simulator 2020; haha, that was a pun, sorry. We feel 10GB right now is fine, but with DirectX Ultimate, added scene complexity, and raytracing becoming the new norm, I do not know if that's still enough two years from now.
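To put the 19 Gbps figure into perspective, peak memory bandwidth follows from the per-pin data rate multiplied by the width of the memory bus. A minimal sketch: the 320-bit bus width is an assumption taken from the RTX 3080's published specification rather than from this review, and the function name is our own.

```python
def memory_bandwidth_gb_s(data_rate_gbps, bus_width_bits):
    """Peak bandwidth in GB/s: per-pin rate (Gbit/s) x bus pins / 8 bits per byte."""
    return data_rate_gbps * bus_width_bits / 8


# 19 Gbps is the data rate quoted above.
# 320 bits is an assumed bus width (published RTX 3080 spec, not stated here).
stock = memory_bandwidth_gb_s(19, 320)
print(f"{stock:.0f} GB/s")  # prints "760 GB/s"
```

The same function can be reused for any other data rate, for example a memory overclock, by changing the first argument.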

The power draw under intensive gaming for this GeForce RTX 3080 remains significant. We measured it to be close to 400 Watt at its peak, and the typical power draw under load is roughly 375 Watt. That is steep for an RTX 3080 rated at 320W at defaults. Idle power consumption was also high at 28W; we suspect that the RGB and LCD setup are responsible for this. We advise a 750 Watt power supply at a minimum, as the rest of the system needs some juice, and you will want some reserve.
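The margins behind these figures are simple arithmetic. A minimal sketch using only the numbers quoted above; the constant and function names are our own.

```python
RATED_W = 320    # default board power quoted above
TYPICAL_W = 375  # measured typical draw under load (approximate)
PEAK_W = 400     # measured peak draw (approximate)
PSU_W = 750      # advised minimum power supply


def percent_over(measured_w, rated_w):
    """How far a measured draw sits above the rated value, in percent."""
    return (measured_w - rated_w) / rated_w * 100


def headroom_w(psu_w, gpu_w):
    """Power left for the rest of the system at a given GPU draw."""
    return psu_w - gpu_w


print(f"typical: +{percent_over(TYPICAL_W, RATED_W):.1f}% over rated")
print(f"peak:    +{percent_over(PEAK_W, RATED_W):.1f}% over rated")
print(f"left for the rest of the system at peak: {headroom_w(PSU_W, PEAK_W)} W")
```

With a 750 Watt unit, roughly 350 Watt remains for the CPU, drives, and fans at the GPU's peak draw, which is where the advice for some reserve comes from.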

This GeForce RTX 3080 hardly exhibited any coil squeak, much less than the Founders Edition card we tested. Is it disturbing? Well, no, it's at a level where you can hear it softly if you put your ear next to the card. In a closed chassis, that noise would fade away into the background. With an open chassis, however, you can hear a bit of coil whine/squeak.

AIB products are deemed and damned to be called the more premium products. And as I already told you, that's no longer the case, as NVIDIA's Founders Edition cards are directly competing with the AIB products. In a perfect scenario, I would like to see the AIB product priced below the Founders Edition. That's not the case. This card will be more expensive than the Founders Edition card. The price is currently listed at 1350 EUR incl. VAT (in the Netherlands). This will vary per country and, of course, with availability. It is incredibly expensive for an RTX 3080, if you can find one to purchase at all.

The card actually tweaks well for an RTX 3080. Gigabyte had already maxed out the power limiter for you; we then added ~100 MHz on the GPU clock, resulting in observed boost frequencies towards 2100 MHz (this depends on and varies per game title/application). Remember that on the SILENT mode BIOS you could go even a bit higher. The memory was binned as well; we reached a beautiful 21 Gbps (effective data rate). All in all, that brings us a very healthy 8% performance premium over the reference model.

Gigabyte offers a gorgeous looking product with the AORUS XTREME, really nice. Powered down it's a bit of a big brick to look at, but when you turn on that PC of yours, everything comes together. Gigabyte did things right when it comes to the factory tweak; I mean, 1905 MHz is the highest clocked value we have seen, next to the MSI SUPRIM. There's no room left on the power limiter either; they opened it up completely at defaults for you.

The product comes with dual-BIOS, and for good reason: we feel that the performance mode, measured at 42 dBA, is a bit too loud for a product in this category and price range. At the cost of very little performance, you can bring that back to roughly 38 dBA under gaming load with the silent BIOS mode. Truth be told, though, I did expect better values with this ginormous cooler. The two outer fans spin clockwise and the smaller middle one anti-clockwise "to prevent turbulence", but it is exactly that middle fan where the noise is coming from, as when I slow it down with my finger, the card becomes silent. Gigabyte really should look into their own thesis.

Lovely is the RGB setup, and beautiful is the little LCD screen that can display a whole lot of things. You will need to activate it with Gigabyte's software suite, though. So all the extras like the newly defined looks, backplate, LCD, cooler, and dual BIOS: are they worth a price premium? We doubt that a little. But it is over-engineering at its best. Nvidia's Project Green Light dictates that all cards sit more or less in the same performance bracket, and that results in a meager 3~4% additional performance over the Founders Edition; that rule of thumb goes for all amped and beefed-up products. Make no mistake, it's lovely and fantastic, but is it worth the price premium? We doubt that. Gigabyte's challenge is the dBA values; they preferred a temperature of roughly 65 Degrees C over acoustics. I think I would have been fine with, say, 75 Degrees C and slightly lower acoustics. But that is a dilemma based on a personal and thus more subjective note.

We can only acknowledge that the sheer performance this card series brings to the table is nothing short of impressive. The new generational architecture tweaks for raytracing and Tensor are also significant. Coming from the RTX 2080, the RTX 3080 exhibited a roughly 85% performance increase, and that is going to bring hybrid raytracing towards higher resolutions. DXR will remain massively demanding, of course, but when you can play Battlefield V in Ultra HD with raytracing and DLSS enabled at over 70 FPS, hey, I'm cool with that. This card, in its default configuration, sits roughly 4% above Founders Edition performance. Of course, pricing will be everything, as the AIB/AIC partners need to compete with an excellent Founders Edition product. Gigabyte did a marvelous job with the AORUS XTREME, but in the end, that choice rests with the end-user and comes down to availability and pricing. It's over-engineered in all its ways, but granted, we do like that. This has to be a top pick.

Unfortunately, I can't change the resolution of my Mac anywhere, and when I go into a game it lags like crazy. When I click on Nvidia GPU activity, I see applications that are supported, but no games. I also see there that no display is connected. The "manual" points out that I can check whether a game is really being processed by the 3080.

Ms.Josey
