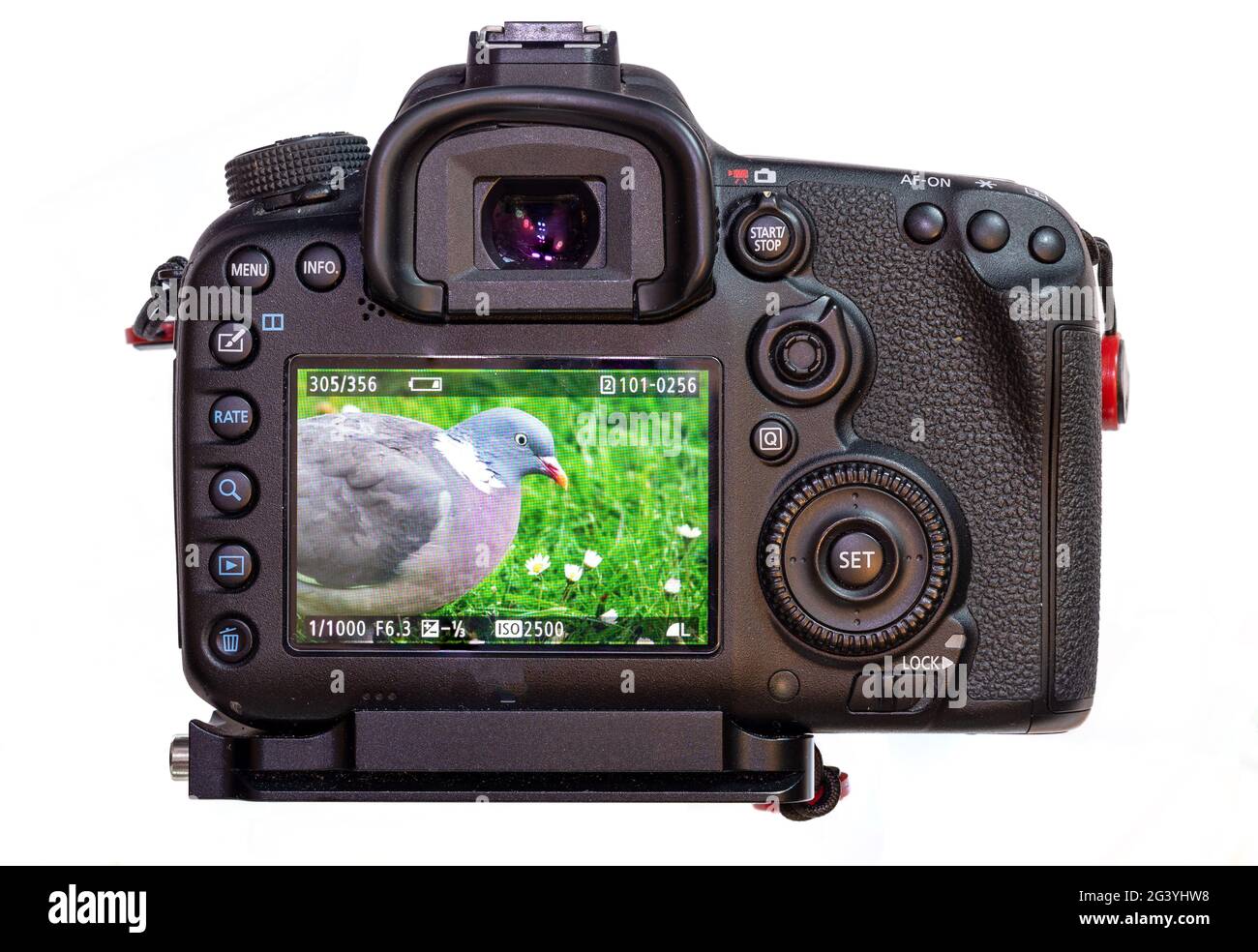

camera behind lcd screen in stock

For decades, we’ve lived with an inconvenient technological truth: Cameras and other sensors cannot occupy the same space as our screens. It’s why, increasingly, smartphones rely on the dreaded “notch” as a way of maximizing screen-to-body ratios while preserving the front-facing camera and other sensors.

Some phone makers, from Oppo to OnePlus, get around this problem by using motorized pop-up cameras, while others have resorted to punching holes in displays to provide the camera with its own peephole. It’s also why even the latest high-end laptops still have pronounced bezels around their displays. The webcam needs a home and it seems no one is willing to live with a notch or hole-punch on a computer.

But it turns out that cameras and screens aren’t quite as incompatible as they seem. Thanks to improvements in manufacturing techniques, these two adversaries are about to end their long-standing territorial dispute. This isn’t a far-flung prediction; it’s happening right now.

Complaining about a phone notch, hole-punch or a large screen bezel is the very definition of a first-world problem. And judging from Apple’s stellar sales numbers, none of these side effects of forward-facing cameras are dealbreakers for buyers.

Still, moving the camera under the display has real benefits. First, it lets you make phones that have true edge-to-edge screens. Videos and photos look better, and app developers can use every square millimeter for their designs, all while keeping the phone’s body as small as possible.

Second, from a design and manufacturing point of view, if cameras and sensors can be placed anywhere, with fewer restrictions on their size and visibility, it redraws the map for phone design. Bigger batteries, thinner phones, more sensors, and much better cameras are all potential upsides.

Cameras placed in bezels or notches create the now all-too-familiar, awkward downward gaze that happens during video calls. “Most of the time, you’re not actually looking at each other when you’re talking over video chat,” Michael Helander, CEO at Toronto-based OTI Lumionics told Digital Trends. “The current placement of videoconferencing cameras in all of these devices is really suboptimal.”

Helander has probably thought about this problem more than most. His company creates specialty materials that enable what was once impossible — making displays transparent enough that you can place a camera behind them.

Once a camera is sitting behind the display, it will finally make our video interactions look and feel like real, in-person interactions — a game changer that couldn’t come at a better time in our COVID-restricted world.

Screen technology is dominated by two kinds of displays. The most common are liquid crystal displays (LCD), which include LED TVs and QLED TVs. The second, organic light-emitting diode (OLED), dominates smartphones and tablets, and is growing in use in laptops and even desktop monitors.

LCDs are actually transparent when not in use — that’s why you see a gray background on a calculator screen wherever the black digit segments aren’t active. But taking advantage of this transparency to take a photo poses big technical hurdles, especially once you factor in the need for a backlight.

One solution favored by Xiaomi and Oppo in their under-display camera (UDC) prototypes is to rely on an OLED pixel’s inherent transparency. When an OLED pixel isn’t being used to emit light, it lets light in. So you can place a camera behind an OLED display and it will be able to gather enough light to capture images. But there’s a catch: You still need to place the camera at the top or bottom of the screen, because when the camera is active, the OLED pixels above it must be shut off, which creates a temporary black area on the screen. That approach is a solution to the notch and hole-punch problem, but it does nothing to solve the downward gaze issue.

The first commercially available phone with an under-display camera — the ZTE Axon 20 5G — uses this technique, but it also suffers from a less-than-ideal compromise. Modern smartphones have incredibly densely packed pixels. The iPhone 12 Pro has a 460ppi (pixels per inch) display, which means that there are more than 200,000 pixels in one square inch. Sony’s Xperia XZ Premium had a whopping 807ppi screen (more than 650,000 pixels per square inch).
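The per-square-inch figures follow directly from squaring the linear pixel density. As a quick check, here is a minimal Python sketch. The function names are ours, and the pixel-pitch helper is an extra illustration that is not quoted in the text above.

```python
MICROMETRES_PER_INCH = 25_400


def pixels_per_square_inch(ppi: float) -> float:
    """Pixel count in a 1 in x 1 in patch, given linear density in pixels per inch."""
    return ppi * ppi


def pixel_pitch_um(ppi: float) -> float:
    """Centre-to-centre spacing between neighbouring pixels, in micrometres."""
    return MICROMETRES_PER_INCH / ppi


for name, ppi in [("iPhone 12 Pro", 460), ("Xperia XZ Premium", 807)]:
    area_density = pixels_per_square_inch(ppi)
    pitch = pixel_pitch_um(ppi)
    print(f"{name}: {area_density:,.0f} pixels per square inch, pitch {pitch:.1f} um")
```

At 460ppi the result is 211,600 pixels per square inch, and at 807ppi it is 651,249, which matches the "more than 200,000" and "more than 650,000" figures. The pitch works out to a few tens of micrometres, which gives a sense of how little room there is between pixels.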

Punching holes in between those pixels, even with a laser, is so tricky that ZTE had to remove some pixels from the area above the camera to buy some extra room. The result is a noticeably lower-resolution square on the screen.

A lower-resolution section of the screen might not bother you when it’s near the top, in an area that’s used mostly for inconsequential information. But few people would accept such an obvious reduction of resolution in the center of their phone’s display, which is what we would need to counteract the downward-gaze problem.

Helander claims the self-assembly process works on any screen size, and lets manufacturers decide how many openings are needed — from just one to 1 billion.

As exciting as it is to think that we’ll soon be able to have much more natural video calls, placing a camera under a display puts an even bigger onus on manufacturers to provide trustworthy privacy measures.

We’ll need some kind of reliable indicator of when the camera is active and an equally reliable way of disabling it. Because it’s under the screen, there’s no way to physically block the lens without blocking content on the screen as well.

Apple recently updated iOS to show a small green dot near the notch when its forward-facing camera is in use, and an orange dot to show when the mic is active. That’s a good way to inform us of what’s going on, but we need something more.

Smart speakers like the Google Nest Mini ship with physical switches that can be used to disable the microphones. Assuming that there’s no way to remotely override the switch’s position, it provides a very good level of trust. A similar mechanism on TVs, monitors, and laptops should come standard once cameras become invisible.

OTI Lumionics already has agreements in place with several Chinese smartphone manufacturers, but due to confidentiality restrictions, these companies can’t be named just yet. “Many of them have prototype phones that have been built and everything looks great,” Helander notes, “but none of them want to disclose anything publicly until they’re ready for their actual official product announcements.” He’s confident that we’ll see these new under-display camera models sometime in 2021, although they may remain a Chinese market exclusive until 2022.

For years, smartphone manufacturers have tried numerous ways to create a truly edge-to-edge display. The ultimate aim is to have a smartphone with a screen that reaches to all four edges of the frame, with no interruption.

The only issue has been the need for a selfie camera. That inevitably has to be put somewhere, and we’ve seen any number of inventive methods that try to hide it, make it less of an obstruction, or at least reduce its visual impact.

There have been pop-up camera mechanisms, tiny dewdrop notches, flip cameras, and punch-hole cameras put on the front of phones. But there is one new technology aimed at hiding it completely: the under-display camera, also known as USC (under-screen camera) or UPC (under-panel camera).

Thankfully, the clue is very clearly in the description. The UPC/USC, or under-display camera, is a camera that’s hidden behind the display panel of the smartphone.

In basic terms, it’s similar to in-display optical fingerprint sensors. A small portion of the display panel is transparent, and lets light through to a camera that sits behind the display. Or to be more technically accurate, a small portion near the top of the screen is actually a second, tiny transparent display.

If you’re wondering why they can’t do what they do with optical fingerprint sensors and just make a portion of the main screen transparent, it’s because standard OLED panels aren’t yet able to let enough light through to the other side to create a decent coloured image. So for now, companies like ZTE and Xiaomi have resorted to using a secondary, much smaller “invisible” display within a display.

And if they used this display as the entire panel, that would have dire consequences for the fidelity of the image on the display. So they put it in a part of the screen where, most of the time, the quality of the image doesn’t matter: in the status bar.

While the eventual aim is surely to have it implemented in a way that makes it completely invisible, early iterations haven’t quite managed it. It’s mostly invisible on darker days, but once you shine light on the area of the display hiding the camera, you can clearly see the area that’s allowing light through. As technology develops, we expect this to improve.

The first phone to have the under-screen camera was the ZTE Axon 20 5G. So far it’s also the only commercially available product with the under-display camera.

As mentioned already, part of the reason is that it’s not possible (yet) to completely hide that secondary transparent screen. The other problem is to do with image quality from the camera that’s behind it.

Adding a layer of material that’s not completely clear in front of a camera makes it harder to get a really good photo. After all, cameras require light to take pictures, but crucially, they also need that light to reach the sensor without any disturbance to the signal in order to produce sharp and accurate results.

This is an extreme over-simplification, but it’s almost like covering the camera with a really thin layer of tracing paper and asking it to take as good a picture as if you hadn’t. It just can’t be done. Or hasn’t been so far.

The aim undoubtedly is to make the transparent display portion more transparent, but also develop better AI/algorithms to correct the issues that arise from filtering light through the screen.

The idea of embedding cameras in a display is not new. From the earliest days of videoconferencing it was recognized that the separation of the camera and the display meant the system could not convey gaze awareness.

A second challenge has emerged more recently. The desire to maximize screen size on small devices such as cell phones leaves little room outside the display to locate a camera.

Placing cameras behind the screen could solve these problems, but doing so tends to degrade the image. Diffraction from the screen’s pixel structure can blur the image, reduce contrast, reduce usable light

In this project we investigate how machine learning can help overcome some of the image degradation problems associated with placing cameras behind the display, and can help frame remote conversations in

Locating the camera above the display results in a vantage point that’s different from a face-to-face conversation, especially with large displays, which can create a sense of looking down on the person speaking.

Worse, the distance between the camera and the display means that the participants will not experience a sense of eye contact. If I look directly into your eyes on the screen, you will see me apparently gazing below your face. Conversely, if I look directly into the camera to give you a sense that I am looking into your eyes, I’m no longer in fact able to see your eyes, and I may miss subtle non-verbal feedback cues.
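To put a rough number on that mismatch, the apparent gaze error can be approximated with simple trigonometry: it is the angle, seen from the viewer's eye, between the on-screen face and the camera. The Python sketch below is our own illustration, and the 10 cm and 50 cm figures are assumed example values rather than measurements from this project.

```python
import math


def gaze_offset_degrees(camera_offset_cm: float, viewing_distance_cm: float) -> float:
    """Angle between looking at the on-screen eyes and looking at the camera.

    camera_offset_cm: distance on the screen plane from the remote person's
        eyes to the camera lens (assumed example input).
    viewing_distance_cm: distance from the viewer's eyes to the screen.
    """
    return math.degrees(math.atan2(camera_offset_cm, viewing_distance_cm))


# Hypothetical laptop setup: webcam 10 cm above the face on screen, viewer 50 cm away.
print(f"bezel camera: {gaze_offset_degrees(10, 50):.1f} degrees off")

# Camera sitting directly behind the remote person's eyes on the display.
print(f"behind-display camera: {gaze_offset_degrees(0, 50):.1f} degrees off")
```

In this simplified model the error grows with the camera's distance from the face and shrinks as the viewer moves back, which is consistent with the problem being more pronounced on large displays.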

Relocating the camera to the point on the screen where the remote participant’s face appears would achieve a natural perspective and a sense of eye contact.

With transparent OLED displays (T-OLED), we can position a camera behind the screen, potentially solving the perspective problem. But because the screen is not fully transparent, looking through it degrades image quality by introducing diffraction and noise.
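A toy model can make the degradation concrete. The Python sketch below treats one row of an image as a list of intensities, dims it by a transmission factor, and blurs it with a small kernel. This is a deliberately simplified stand-in of our own: the kernel, the 20% transmission figure, and the function names are all assumptions, and a real diffraction pattern from a T-OLED pixel grid is far more complex.

```python
def through_screen(signal, kernel, transmission):
    """Dim a 1-D row of intensities and blur it with a normalised kernel.

    The row is treated as periodic (indices wrap around) so that the edges
    behave like the interior.
    """
    half = len(kernel) // 2
    n = len(signal)
    out = []
    for i in range(n):
        blurred = sum(w * signal[(i + j - half) % n] for j, w in enumerate(kernel))
        out.append(transmission * blurred)
    return out


def michelson_contrast(signal):
    """(max - min) / (max + min), a common measure of stripe visibility."""
    lo, hi = min(signal), max(signal)
    return (hi - lo) / (hi + lo) if hi + lo else 0.0


stripes = [1.0, 1.0, 0.2, 0.2] * 4      # alternating bright and dark bars
kernel = [0.25, 0.5, 0.25]              # assumed blur kernel, sums to 1
degraded = through_screen(stripes, kernel, transmission=0.2)

print(f"contrast before: {michelson_contrast(stripes):.2f}")
print(f"contrast after:  {michelson_contrast(degraded):.2f}")
print(f"mean light before: {sum(stripes) / len(stripes):.2f}")
print(f"mean light after:  {sum(degraded) / len(degraded):.2f}")
```

In this toy case the blur halves the stripe contrast while the transmission factor cuts the light reaching the sensor to a fifth. Less light means a given amount of sensor noise does proportionally more damage, which is why noise and blur have to be tackled together.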

To compensate for the image degradation inherent in photographing through a T-OLED screen, we used a U-Net neural-network structure that both improves the signal-to-noise ratio and de-blurs the image.

The ability to position cameras in the display and still maintain good image quality provides an effective solution to the perennial problems of gaze awareness and perspective.

Isolating the people from the background opens up additional options. You can screen out a background that is distracting or that contains sensitive information. You can also use the background region to

Human interaction in videoconferences can be made more natural by correcting gaze, scale, and position, using convolutional neural network segmentation together with cameras embedded in a partially transparent display. The diffraction and noise resulting from placing the camera behind the screen can be effectively removed using a U-Net neural network. Segmentation of live video also makes it possible to combine the speaker with a choice of background content.
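Once a segmentation mask exists, combining the speaker with new background content is a per-pixel blend. The Python sketch below shows that blending step only, on tiny grayscale images. The mask here is written by hand as a stand-in for the network's output, and the function name and values are our own assumptions, not the project's code.

```python
def composite(person, background, mask):
    """Blend two equally sized grayscale images with a per-pixel mask.

    mask values lie in [0, 1]: 1 keeps the person pixel, 0 keeps the
    background pixel, and values in between mix the two (soft edges).
    """
    return [
        [m * p + (1.0 - m) * b for p, b, m in zip(p_row, b_row, m_row)]
        for p_row, b_row, m_row in zip(person, background, mask)
    ]


# Hypothetical 2x2 frames: a bright speaker and a dark replacement background.
person = [[0.9, 0.9], [0.9, 0.9]]
background = [[0.1, 0.1], [0.1, 0.1]]

# Hand-written mask standing in for a segmentation network's prediction.
mask = [[1.0, 0.0], [0.5, 0.0]]

for row in composite(person, background, mask):
    print([round(value, 2) for value in row])
```

Pixels the mask marks as the speaker keep their original value, background pixels are replaced, and fractional mask values along the outline blend the two so the edge does not look cut out.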

As is often the case with new technology, under-display cameras didn’t make a great first impression. It’s a nice idea in theory, of course — you don’t need a notch or a hole-punch if you can put a selfie camera under the display — but the earliest efforts had some issues.

ZTE’s Axon 20 last year was the first phone to ship with one, and it was bad. The camera quality was incredibly poor, and the area of the screen looked more distracting than a notch. Samsung followed up this year with the Galaxy Z Fold 3, which had similar issues.

But things are actually getting better. Two newer phones on the market, Xiaomi’s Mix 4 and ZTE’s Axon 30, use a different approach to the technology, and it’s an improvement on the previous generation. Instead of having a lower resolution area of the screen that allows light through to the camera, they shrink the size of the pixels without reducing the number.
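A back-of-the-envelope geometric model shows why both approaches let more light through. The Python sketch below assumes each pixel is a single opaque square emitter sitting in a square cell, and that everything else in the cell is clear. That is a big simplification (real panels have subpixels, wiring, and other layers), and the ppi and emitter sizes are made-up illustration values, not ZTE or Xiaomi specifications.

```python
MICROMETRES_PER_INCH = 25_400


def open_area_fraction(ppi: float, emitter_width_um: float) -> float:
    """Share of the panel not covered by emitters in a square-pixel toy model."""
    pitch_um = MICROMETRES_PER_INCH / ppi   # size of one pixel cell
    if emitter_width_um > pitch_um:
        raise ValueError("emitter cannot be wider than the pixel pitch")
    return 1.0 - (emitter_width_um / pitch_um) ** 2


# Hypothetical numbers for the three situations described above.
baseline = open_area_fraction(ppi=400, emitter_width_um=50)   # ordinary screen area
fewer = open_area_fraction(ppi=200, emitter_width_um=50)      # older: remove pixels
smaller = open_area_fraction(ppi=400, emitter_width_um=30)    # newer: shrink pixels

print(f"ordinary area:  {baseline:.0%} open")
print(f"fewer pixels:   {fewer:.0%} open")
print(f"smaller pixels: {smaller:.0%} open")
```

Both changes raise the open fraction in this model, but only the second keeps the pixel count, and therefore the resolution, of the patch over the camera the same as the rest of the screen.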

This means that the part of the screen that covers the camera is really difficult to see in normal use. Look at how the Axon 30 compares to the Axon 20 on a white background, which was the most challenging situation for the older phone to disguise the camera in. It’s also much harder to make out than the camera on Samsung’s Galaxy Z Fold 3:

ZTE’s Axon 20 on the left, and the newer Axon 30 on the right. The area of the screen that covers the selfie camera is much less noticeable on the Axon 30.

Now, the camera is clearly still compromised compared to one that doesn’t have to gather light from behind a screen. ZTE and Xiaomi lean hard on algorithms for post-processing — you can tell because the live image preview looks much worse than the final picture. The results still look over-processed and unnatural, even if they’re more usable than their predecessors’. Video quality is also bad, because it’s probably too much to ask for these phones to do the processing in real time.

There’s more to the idea than just reducing the size of your phone bezels, though. We spoke to Steven Bathiche from Microsoft’s Applied Sciences group on how the company is working on under-display cameras for an entirely different reason — so you can maintain eye contact while looking at your screen on video calls.

Ms.Josey
