Origin of lag in LCD displays

Display lag is a phenomenon associated with most types of liquid crystal displays (LCDs), such as those in smartphones and computers, and nearly all types of high-definition televisions (HDTVs). It refers to latency, or lag, between when the signal is sent to the display and when the display starts to show that signal. This lag time has been measured as high as 68 ms, or roughly four frames on a 60 Hz display. Display lag is not to be confused with pixel response time, which is the amount of time it takes for a pixel to change from one brightness value to another. Currently, most manufacturers quote the pixel response time but neglect to report display lag.

For older analog cathode ray tube (CRT) technology, display lag is nearly zero, due to the nature of the technology, which does not have the ability to store image data before display. The picture signal is minimally processed internally, simply for demodulation from a radio-frequency (RF) carrier wave (for televisions), and then splitting into separate signals for the red, green, and blue electron guns, and for the timing of the vertical and horizontal sync. Image adjustments typically involve reshaping the signal waveform but without storage, so the image is written to the screen as fast as it is received, with only nanoseconds of delay for the signal to traverse the wiring inside the device from input to the screen.

For modern digital signals, significant computer processing power and memory storage are needed to prepare an input signal for display. For either over-the-air or cable TV, the same analog demodulation techniques are used, but after that the signal is converted to digital data, which must be decompressed using the MPEG codec and rendered into an image bitmap stored in a frame buffer.

For progressive scan display modes, the signal processing stops here, and the frame buffer is immediately written to the display device. In its simplest form, this processing may take several microseconds to occur.

For interlaced video, additional processing is frequently applied to deinterlace the image and make it seem clearer or more detailed than it actually is. This is done by storing several interlaced frames and then applying algorithms to determine areas of motion and stillness, and to either merge interlaced frames for smoothing or extrapolate where pixels are in motion. The resulting calculated frame buffer is then written to the display device.

Deinterlacing imposes a delay that can be no shorter than the time it takes to receive the frames being stored for reference, plus an additional variable period for calculating the resulting extrapolated frame buffer; delays of 16 to 32 ms are common.
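The lower bound described above is simple arithmetic: the number of buffered frames times the frame time, plus whatever the algorithm needs to compute the output. A minimal Python sketch, assuming a fixed input frame rate and treating the compute time as a single hypothetical number (`compute_ms`); the function name is illustrative and not taken from any real tool:

```python
def deinterlace_delay_ms(frames_stored: int, frame_rate_hz: float, compute_ms: float = 0.0) -> float:
    """Lower-bound estimate of deinterlacing delay, in milliseconds.

    frames_stored: interlaced frames buffered for reference (assumed value).
    frame_rate_hz: rate at which those frames arrive (assumed, e.g. 60).
    compute_ms: extra, implementation-specific time to build the output frame.
    """
    if frames_stored < 0 or frame_rate_hz <= 0:
        raise ValueError("frames_stored must be >= 0 and frame_rate_hz > 0")
    frame_time_ms = 1000.0 / frame_rate_hz
    # Every buffered frame must arrive before the output frame can be computed.
    return frames_stored * frame_time_ms + compute_ms


for frames in (1, 2):
    print(frames, round(deinterlace_delay_ms(frames, 60.0), 1))  # 1 16.7, then 2 33.3
```

Because the compute term is implementation-specific, the sketch leaves it at zero by default; a real device would add its own processing time on top.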

While the pixel response time of the display is usually listed in the monitor's specifications, no manufacturers advertise the display lag of their displays, likely because the trend has been to increase display lag as manufacturers find more ways to process input at the display level before it is shown. Possible culprits are the processing overhead of HDCP, Digital Rights Management (DRM), and DSP techniques employed to reduce the effects of ghosting, and the cause may vary depending on the model of display. Investigations have been performed by several technology-related websites.

LCD, plasma, and DLP displays, unlike CRTs, have a native resolution. That is, they have a fixed grid of pixels on the screen that shows the image sharpest when running at the native resolution (so nothing has to be scaled to full size, which blurs the image). In order to display non-native resolutions, such displays must use video scalers, which are built into most modern monitors. As an example, a display that has a native resolution of 1600x1200 being provided a signal of 640x480 must scale width and height by 2.5x to display the image provided by the computer on the native pixels. Doing this while producing as few artifacts as possible requires advanced signal processing, which can be a source of introduced latency. Interlaced video signals such as 480i and 1080i require a deinterlacing step that adds lag. Anecdotally, lag is lower when the signal is sent in progressive scanning mode. External devices have also been shown to reduce overall latency by providing faster image-space resizing algorithms than those present in the LCD screen.
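The 2.5x figure follows directly from dividing the native pixel grid by the source resolution. A small sketch (the helper name `scale_factors` is hypothetical) that computes the factors exactly with Python's standard `fractions` module:

```python
from fractions import Fraction


def scale_factors(native: tuple[int, int], source: tuple[int, int]) -> tuple[Fraction, Fraction]:
    """Horizontal and vertical factors a scaler must apply to fill the native grid."""
    native_w, native_h = native
    source_w, source_h = source
    return Fraction(native_w, source_w), Fraction(native_h, source_h)


sx, sy = scale_factors((1600, 1200), (640, 480))
print(float(sx), float(sy))  # 2.5 2.5
# A non-integer factor (here 5/2) means the scaler cannot just repeat each
# source pixel a fixed number of times; it has to resample the image.
print(sx.denominator != 1)  # True
```

Using `Fraction` instead of floats keeps the check for a non-integer factor exact.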

Many LCDs also use a technology called "overdrive" which buffers several frames ahead and processes the image to reduce blurring and streaks left by ghosting. The effect is that everything is displayed on the screen several frames after it was transmitted by the video source.

Display lag can be measured using a test device such as the Video Signal Input Lag Tester. Despite its name, the device cannot independently measure input lag. It can only measure input lag and response time together.

Without a dedicated measurement device, lag can be measured using a test display (the display being measured), a control display (usually a CRT) that would ideally have negligible display lag, a computer capable of mirroring an output to the two displays, stopwatch software, and a high-speed camera pointed at the two displays running the stopwatch program. The lag time is measured by taking a photograph of the displays running the stopwatch software, then subtracting the two times shown on the displays in the photograph. This method only measures the difference in display lag between two displays and cannot determine the absolute display lag of a single display. CRTs are preferable as a control display because their display lag is typically negligible. However, video mirroring does not guarantee that the same image will be sent to each display at the same point in time.

In the past, it was considered common knowledge that the results of this test were exact, as they seemed easily reproducible even when the displays were plugged into different ports and different cards, which suggested that the effect is attributable to the display and not the computer system. An in-depth analysis released on the German website Prad.de revealed that these assumptions were wrong. Averaging measurements as described above leads to comparable results because they include the same amount of systematic error. As different monitor reviews show, display lag values determined this way for the very same monitor model differ by margins of up to 16 ms or even more.

To minimize the effects of asynchronous display outputs (where the image is transferred to each monitor at a different point in time, or the refresh frequency actually used by each monitor differs), a highly specialized software application called SMTT was developed.

Several approaches to measuring display lag have since been revived in slightly changed forms, but they still reintroduce old problems that had already been solved by the aforementioned SMTT. One such method involves connecting a laptop to an HDTV through a composite connection, running a timecode that shows on the laptop's screen and the HDTV simultaneously, and recording both screens with a separate video recorder. When the video of both screens is paused, the difference in time shown on the two displays has been interpreted as an estimate of the display lag.

Display lag contributes to the overall latency in the interface chain from the user's inputs (mouse, keyboard, etc.) to the graphics card to the monitor. Depending on the monitor, display lag times between 10 and 68 ms have been measured. However, the effects of the delay on the user depend on each user's own sensitivity to it.

Display lag is most noticeable in games (especially on older video-game consoles), with different games affecting the perception of delay. For instance, in PvE (player versus environment) games, a slight input delay is not as critical as in PvP (player versus player) games or other games that favor quick reflexes.

If the game's controller produces additional feedback (rumble, the Wii Remote's speaker, etc.), then the display lag will cause this feedback to not accurately match up with the visuals on-screen, possibly causing extra disorientation (e.g. feeling the controller rumble a split second before a crash into a wall).

TV viewers can be affected as well. If a home theater receiver with external speakers is used, then the display lag causes the audio to be heard earlier than the picture is seen. "Early" audio is more jarring than "late" audio. Many home-theater receivers have a manual audio-delay adjustment which can be set to compensate for display latency.

Many televisions, scalers, and other consumer display devices now offer what is often called a "game mode", in which the extensive preprocessing responsible for additional lag is specifically sacrificed to decrease, but not eliminate, latency. While typically intended for video game consoles, this feature is also useful for other interactive applications. Similar options have long been available on home audio hardware and modems for the same reason. Connecting through a VGA or component cable should eliminate perceptible input lag on many TVs, even those that already have a game mode. Advanced post-processing is nonexistent on analog connections, and the signal passes through without delay.

A television may have a picture mode that reduces display lag for computers. Some Samsung and LG televisions automatically reduce lag for a specific input port if the user renames the port to "PC".

LCD screens with a high response time often do not give a satisfactory experience when viewing fast-moving images (they often leave streaks or blur, called ghosting). An LCD screen with both a high response time and significant display lag is unsuitable for playing fast-paced computer games or performing fast, high-accuracy operations on the screen, because the mouse cursor lags behind.

One of the areas where the A-MVA panel does extremely well is display lag and pixel response time. Just to recap, you may have heard complaints about "input lag" on various LCDs, so that's one area we look at in our LCD reviews. We put input lag in quotation marks because while many people call it "input lag", the reality is that this lag occurs somewhere within the LCD panel circuitry, or perhaps even at the level of the liquid crystals. Where this lag occurs isn't the concern; instead, we just want to measure the duration of the lag. That's why we prefer to call it "processing lag" or "display lag".

To test for display lag, we run the Wings of Fury benchmark in 3DMark03, with the output set to the native LCD resolution - in this case 1920x1200. Our test system is a quad-core Q6600 running a Radeon HD 3870 on a Gigabyte GA-X38-DQ6 motherboard - we had to disable CrossFire support in order to output the content to both displays. We connect the test LCD and a reference LCD to two outputs from the Radeon 3870 and set the monitors to run in clone mode.

The reference monitor is an HP LP3065, which we have found to be one of the best LCDs we currently possess in terms of not having display lag. (The lack of a built-in scaler probably has something to do with this.) While we know some of you would like us to compare performance to a CRT, that's not something we have around our offices anymore. Instead, we are looking at relative performance, and it's possible that the HP LP3065 has 20ms of lag compared to a good CRT, or maybe not. Either way, the relative lag is constant, so even if a CRT is faster at updating, we can at least see if an LCD is equal to or better than our reference display.

While the benchmark is looping, we snap a bunch of pictures of the two LCDs sitting side-by-side (using a relatively fast shutter speed). 3DMark03 shows the runtime with a resolution of 10ms at the bottom of the display, and we can use this to estimate whether a particular LCD has more or less processing lag than our reference LCD. We sort through the images and discard any where the times shown on the LCDs are not clearly legible, until we are left with 10 images for each test LCD. We record the difference in time relative to the HP LP3065 and average the 10 results to come up with an estimated processing lag value, with lower numbers being better. Negative numbers indicate a display is faster than the HP LP3065, while positive numbers mean the HP is faster and has less lag.
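The averaging step can be sketched as follows. This assumes each photo yields one timer reading per display, as in the procedure above; the readings below are made up for illustration, and the sign convention matches the one described (positive means the reference display is faster). A lagging display shows an older, smaller time, so the per-photo lag is reference minus test.

```python
from statistics import fmean


def relative_lag_ms(test_readings_ms: list[float], reference_readings_ms: list[float]) -> float:
    """Average lag of a test LCD relative to a reference LCD, in milliseconds.

    Each index is one photograph: the timer value shown on the test display and
    on the reference display at the same instant. A lagging display shows an
    older (smaller) time, so positive results mean the test display is slower.
    """
    if len(test_readings_ms) != len(reference_readings_ms) or not test_readings_ms:
        raise ValueError("need the same non-zero number of readings for both displays")
    diffs = [ref - test for test, ref in zip(test_readings_ms, reference_readings_ms)]
    return fmean(diffs)


# Hypothetical readings from four photos (the review above uses ten).
reference = [12340, 15670, 18120, 20450]
test = [12320, 15640, 18100, 20420]
print(relative_lag_ms(test, reference))  # 25.0
```

Averaging helps with the random one-frame jumps caused by mismatched refresh timing, but, as noted elsewhere in this article, it cannot remove systematic errors shared by every photo.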

It's important to note that this is merely an estimate - whatever the reference monitor happens to be, there are some inherent limitations. For one, LCDs only refresh their display 60 times per second, so we cannot specifically measure anything less than approximately 17ms with 100% accuracy. Second, the two LCDs can have mismatched vertical synchronizations, so it's entirely possible to end up with a one-frame difference on the time readout because of this. That's why we average the results of 10 images, and we are confident that our test procedure can at least show when there is a consistent lag/internal processing delay. Here is a summary of our results for the displays we have tested so far.

As you can see, all of the S-PVA panels we have tested to date show a significant amount of input lag, ranging from 20ms up to 40ms. In contrast, the TN and S-IPS panels show little to no processing lag (relative to the HP LP3065). The BenQ FP241VW performs similarly to the TN and IPS panels, with an average display lag of 2ms - not something you would actually notice compared to other LCDs. Obviously, if you're concerned with display lag at all, you'll want to avoid S-PVA panels for the time being. That's unfortunate, considering S-PVA panels perform very well in other areas.

Despite what the manufacturers might advertise as their average pixel response time, we found most of the LCDs are basically equal in this area - they all show roughly a one-frame "lag", which equates to a response time of around 16ms. In our experience, processing lag is far more of a concern than pixel response times. Taking a closer look at just the FP241VW, we can see the typical one-frame lag in terms of pixel response time. However, the panel does appear to be a little faster in response time than some of the other panels we've tested (notice how the "ghost image" isn't as visible as on the HP LP3065), and we didn't see parts of three frames in any of the test images.

After the initial article went live, one of our readers who works in the display industry sent me an email. He provides some interesting information about the causes of image lag. Below is an (edited) excerpt from his email. (He wished to remain anonymous.)

PVA and MVA have inherent drawbacks with respect to LCD response time, especially gray-to-gray. To address this shortcoming, companies have invested in ASICs that perform a trick generically referred to as "overshoot." The liquid crystal (LC) material in *VA responds sluggishly to small voltage changes (a change from one gray level to another). To fix this, the ASIC does some image processing and basically applies an overvoltage to the electrodes of the affected pixel to spur the LC material into rapid movement. Eventually the correct settling voltage is applied to hold the pixel at the required level matching the input drive level.

It's very complicated math taking place in the ASIC in real time. It works well but with an important caveat: it requires a frame buffer. What this means is that as video comes into the panel, there is a memory device that can capture one whole video frame and hold it. After comparing it to the next incoming frame, the required overshoot calculations are made. Only then is the first captured frame released to the panel's timing controller, which is when the frame is rendered to the screen. As you may have already guessed, that causes at least one frame time worth of lag (17ms).
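To see why overshoot needs a frame buffer, here is a deliberately simplified Python model. It works on gray levels rather than electrode voltages and uses a single hypothetical `gain` instead of the tuned tables a real ASIC would likely use; it only illustrates that computing the drive for each pixel requires the old and new frames to be in memory at the same time.

```python
def overdrive_levels(prev_frame: list[int], target_frame: list[int],
                     gain: float = 0.5, max_level: int = 255) -> list[int]:
    """Toy overshoot: drive each pixel past its target in the direction of change.

    prev_frame / target_frame: gray levels (0..max_level) for the same pixels.
    gain: hypothetical overshoot strength; real panels tune this per transition.
    Both frames must be held at once, which is what the frame buffer provides.
    """
    drive = []
    for prev, target in zip(prev_frame, target_frame):
        boosted = target + gain * (target - prev)          # overshoot the requested change
        clamped = max(0, min(max_level, round(boosted)))   # stay within the drive range
        drive.append(int(clamped))
    return drive


print(overdrive_levels([100, 100, 200], [150, 100, 180]))  # [175, 100, 170]
```

When the next frame requests the same level again, the boost term is zero and the plain target level is sent, which loosely mirrors the settling voltage described above.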

Some companies discovered some unintended artifacts in their overshoot calculations and the only way they saw to correct these was to allow for their algorithm to look ahead by two frames instead of one. So they had to up the memory of the frame buffer and now they started capturing and holding not one but two frames upon which they make their complex overshoot predictions to apply the corrected pixel drive levels and reduce gray-to-gray response time (at the expense of lag time). Again, it works very well for improving response time, but at the expense of causing lag, which gamers hate. That in a nutshell is the basis of around 33ms of the lag measured with S-PVA.

Not every display uses this approach, but this could account for the increase in display lag between earlier S-PVA and later S-PVA panels. It's also important to note that I tested the Dell 2408WFP revision A00, and apparently revision A01 does not have as much lag. I have not been able to confirm this personally, however. The above also suggests that displays designed to provide higher image quality through various signal processing techniques could end up with more display lag caused by the microchip and microcode, which makes sense. Now all we need are better algorithms and technologies to reduce the need for all of this extra image processing, or, as we have seen with some displays (particularly HDTVs), the ability to disable the image processing.

Now, let's talk about how we measure the input lag. It's a rather simple test because everything is done by our dedicated photodiode tool and special software. We use this same tool for our response time tests, but it measures something different with those. For input lag, we place the photodiode tool at the center of the screen because that's where it records the data in the middle of the refresh rate cycle, so the results aren't skewed toward the beginning or end of the cycle. We connect our test PC to the tool and the TV. The tool flashes a white square on the screen and records the amount of time it takes until the screen starts to show the white square; this is an input lag measurement. It stops the measurement the moment the pixels start to change color, so we don't account for the response time during our testing. It records multiple data points, and our software averages all the measurements, excluding any outliers.
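The measurement logic described here (detect when the screen starts to change, then average many runs while discarding outliers) might look roughly like this. The trace format, the 0.05 noise threshold, and the median-absolute-deviation outlier rule are all assumptions for illustration; the text does not say how outliers are identified or how the tool's data is structured.

```python
from statistics import fmean, median


def onset_ms(trace: list[tuple[float, float]], threshold: float = 0.05) -> float:
    """Time at which the measured brightness first departs from its starting level.

    trace: (time_ms, brightness) samples, brightness normalized to 0..1 and
    time 0 when the white square is sent (an assumed format). The threshold
    is a hypothetical noise margin.
    """
    baseline = trace[0][1]
    for t, brightness in trace:
        if abs(brightness - baseline) > threshold:
            return t
    raise ValueError("no change detected in trace")


def average_without_outliers(values: list[float], k: float = 3.0) -> float:
    """Mean after dropping values more than k median-absolute-deviations from the median."""
    med = median(values)
    mad = median(abs(v - med) for v in values)
    # Fall back to all values if the filter would leave nothing.
    kept = [v for v in values if abs(v - med) <= k * mad] or list(values)
    return fmean(kept)


trace = [(0.0, 0.0), (2.0, 0.0), (4.0, 0.01), (6.0, 0.3), (8.0, 0.9)]
print(onset_ms(trace))                                          # 6.0
print(average_without_outliers([10.0, 11.0, 9.0, 10.0, 30.0]))  # 10.0
```

Stopping at the first departure from the baseline is what keeps the response time out of the input lag figure.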

When a TV displays a new image, it progressively draws it on the screen from top to bottom, so the image first appears at the top. As we have the photodiode tool placed in the middle, it records the image when it's halfway through its refresh rate cycle. A 120Hz TV displays 120 images every second, so each image takes 8.33 ms to be drawn on the screen. Since we have the tool in the middle of the screen, we're measuring halfway through the cycle, so it takes 4.17 ms to get there; this is the minimum input lag we can measure on a 120Hz TV. If we measure an input lag of 5.17 ms, then in reality it's only taking an extra millisecond of lag to appear on the screen. For a 60Hz TV, the minimum is 8.33 ms.
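The half-frame floor is easy to compute for any refresh rate. A short sketch (the function names are hypothetical) that reproduces the 4.17 ms and 8.33 ms figures and the one-millisecond example:

```python
def min_center_lag_ms(refresh_hz: float) -> float:
    """Smallest lag a sensor at mid-screen can record on a top-to-bottom scan."""
    frame_time_ms = 1000.0 / refresh_hz   # time to draw one full image
    return frame_time_ms / 2              # the scan reaches the middle halfway through


def extra_lag_ms(measured_ms: float, refresh_hz: float) -> float:
    """Portion of a measured lag beyond the unavoidable half-frame scan-out time."""
    return measured_ms - min_center_lag_ms(refresh_hz)


print(round(min_center_lag_ms(120), 2))   # 4.17
print(round(min_center_lag_ms(60), 2))    # 8.33
print(round(extra_lag_ms(5.17, 120), 2))  # 1.0
```

The same arithmetic is also why 120Hz results tend to come out at roughly half of the 60Hz ones.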

Some people may confuse our response time and input lag tests. For input lag, we measure the time it takes from when the photodiode tool sends the signal to when it appears on-screen. We use flashing white squares, and the tool stops the measurement the moment the screen changes color so that it doesn't include the response time. For the response time test, we use grayscale slides, and this test measures the time it takes to make a full transition from one gray shade to the next. In simple terms, the input lag test stops when the color on the screen starts to change, and the response time test starts when the colors change.
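The distinction can be shown on a single brightness trace: input lag ends when the transition begins, and response time covers the transition itself. This sketch assumes the same kind of (time, brightness) samples a photodiode would produce and uses 10%/90% thresholds, which are a common convention but are not stated in the text; the function name is hypothetical.

```python
def split_lag_and_response(trace: list[tuple[float, float]],
                           start_frac: float = 0.1, end_frac: float = 0.9) -> tuple[float, float]:
    """Split one brightness trace into (input_lag_ms, response_time_ms).

    trace: (time_ms, brightness) samples with time 0 when the signal is sent
    (assumed format). The transition is taken to start once brightness has moved
    start_frac of the way to its final level, and to end at end_frac; the 10%/90%
    defaults are an assumed convention.
    """
    start_level, final_level = trace[0][1], trace[-1][1]
    span = final_level - start_level
    if span == 0:
        raise ValueError("no transition in trace")
    t_start = None
    for t, brightness in trace:
        progress = (brightness - start_level) / span  # works for rising or falling changes
        if t_start is None and progress >= start_frac:
            t_start = t  # input lag ends here
        if progress >= end_frac:
            return t_start, t - t_start  # response time runs from t_start to here
    raise ValueError("transition never reached end_frac")


trace = [(0, 0.0), (5, 0.0), (10, 0.05), (12, 0.2), (14, 0.6), (16, 0.95), (18, 1.0)]
print(split_lag_and_response(trace))  # (12, 4)
```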

This test measures the input lag of 1080p signals with a 60Hz refresh rate. This is especially important for older consoles (like the PS4 or Xbox One) or PC gamers who play at a lower resolution at 60Hz. As with other tests, this is done in Game Mode, and unless otherwise stated, our tests are done in SDR.

We repeat the same process but with Game Mode disabled, to show the difference between in and out of Game Mode. This could be important if you scroll a lot through your TV's smart OS and easily notice delay; if you find it's too high and it's bothering you, simply switch into Game Mode when you need to scroll through menus.

This result can also be important if you want to play video games with the TV's full image processing. You might consider this if you're playing a non-reaction-based game.

This result is important if you play 1440p games, like from an Xbox or a PC. However, 1440p games are still considered niche, and not all TVs support this resolution, so we can't measure the 1440p input lag of those.

The 4k @ 60Hz input lag is probably the most important result for most console gamers. Along with 1080p @ 60Hz input lag, it carries the most weight in the final scoring since most gamers are playing at this resolution. We expect this input lag to be lower than the 4k @ 60Hz with HDR, chroma 4:4:4, or motion interpolation results because it requires the least amount of image processing.

With the PC sending a 4k @ 60Hz signal, we use an HDFury Linker to add an HDR signal. This is important if you play HDR games, and while it may add some extra lag, it's still low for most TVs.

This is the average input lag when all the TV settings are optimized to reduce it at this specific resolution, with proper full 4:4:4 chroma and no subsampling.

This test is important for people wanting to use the TV as a PC monitor. Chroma 4:4:4 is a video signal format that doesn't use chroma subsampling, keeping full color resolution for every pixel, which is necessary if you want proper text clarity. We want to know how much delay is added, but for nearly all of our TVs, it doesn't add any delay at all compared to the 4k @ 60Hz input lag.

Like with 1080p @ 60Hz Outside Game Mode, we measure the input lag outside of Game Mode in 4k. Since most TVs have a native 4k resolution, this number is more important than the 1080p lag while you're scrolling through the menus.

Motion interpolation is an image processing technique that raises the frame rate, for example turning a 30 fps video into 60 fps. However, for most TVs, you need to disable Game Mode to enable the motion interpolation setting, as only Samsung offers motion interpolation in Game Mode. As such, most TVs will have a high input lag with motion interpolation. Also, we measure this with the motion interpolation settings at their highest because we want to see how much the input lag increases in a worst-case scenario.

We repeat most of the same tests but with 120 fps signals instead. This is especially important for gaming on some consoles, like the Xbox Series X or Xbox One X, as some other devices don't output signals at 120 fps. The 120Hz input lag should be around half the 60Hz input lag, but it's not going to be exactly half.

Once again, this result is only important for PC and Xbox gamers because they use 1440p signals. Not all TVs support this resolution either, so we can't always test for it.

This test is important if you're a gamer with an HDMI 2.1 graphics card or console. Since most 4k @ 120Hz signals require HDMI 2.1 bandwidth, you don't have to worry about this if your TV or gaming console is limited to HDMI 2.0. For this test, we use our HDMI 2.1 PC with an NVIDIA RTX 3070 graphics card because we need an HDMI 2.1 source to test it.

We also measure the input lag with any variable refresh rate (VRR) support enabled, if the TV has it. VRR is a feature gamers use to match the TV's refresh rate with the frame rate of the game, even if the frame rate drops. Enabling VRR could potentially add lag, so that's why we measure it, but most TVs don't have any issue with this. We measure this test by setting the TV to its maximum refresh rate and enabling one of its VRR formats, like FreeSync or G-SYNC.

Input lag is not an official spec advertised by most TV companies because it depends on two varying factors: the type of source and the settings of the television. The easiest way you can measure it is by connecting a computer to the TV and displaying the same timer on both screens. Then, if you take a picture of both screens, the time difference will be your input lag. This is, however, an approximation, because your computer does not necessarily output both signals at the same time. In this example image, an input lag of 40 ms (1:06:260 – 1:06:220) is indicated. However, our tests are a lot more accurate than that because of our tool.
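The timer method boils down to subtracting two readings. A minimal sketch, assuming the readings are in a minutes:seconds:milliseconds format and that the larger value is the one on the PC screen (the text does not say which screen showed which time); the function names are hypothetical:

```python
def timer_to_ms(reading: str) -> int:
    """Convert a 'M:SS:mmm' stopwatch reading (assumed format) to milliseconds."""
    minutes, seconds, millis = (int(part) for part in reading.split(":"))
    return (minutes * 60 + seconds) * 1000 + millis


def photo_lag_ms(pc_reading: str, tv_reading: str) -> int:
    """Approximate TV lag from one photo: the lagging TV shows an older time."""
    return timer_to_ms(pc_reading) - timer_to_ms(tv_reading)


print(photo_lag_ms("1:06:260", "1:06:220"))  # 40
```

As the paragraph above notes, this is only an approximation, since the computer may not send both outputs at the same moment.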

Most people will only notice delays when the TV is out of Game Mode, but some gamers might be more sensitive to input lag even in Game Mode. Keep in mind that the input lag of the TV isn't the absolute lag of your entire setup; there's still your PC/console and your keyboard/controller. Every device adds a bit of delay, and the TV is just one piece in a line of electronics that we use while gaming. If you want to know how much lag you're sensitive to, check out this input lag simulator. You can simulate what it's like to add a certain amount of lag, but keep in mind this tool is relative to your current setup's lag, so even if you set it to 0 ms, there's still the default delay.

The most important setting to ensure you get the lowest input lag possible is the Game Mode setting. This varies between brands; some have Game Mode as its own setting that you can enable within any picture mode, while others have a Game picture mode. Go through the settings on your TV to see which it is. You'll know you have the right setting when the screen goes black for a second because that's the TV putting itself into Game Mode.

Many TVs have an Auto Low Latency Mode feature that automatically switches the TV into Game Mode when you launch a game from a compatible device. Often, you need to enable a certain setting for it to work.

Some peripherals, like Bluetooth mice or keyboards, add lag because Bluetooth connections have inherent lag, but those are rarely used with TVs anyway.

Input lag is the time it takes a TV to display an image on the screen from when it first receives the signals. It's important to have low input lag for gaming, and while high input lag may be noticeable if you're scrolling through Netflix or other apps, it's not as important for that use. We test for input lag using a special tool, and we measure the input lag at different resolutions, refresh rates, and with different settings enabled to see how changing the signal type can affect the input lag.

When you're using a monitor, you want your actions to appear on the screen almost instantly, whether you're typing, clicking through websites, or gaming. If you have high input lag, you'll notice a delay from the time you type something on your keyboard or when you move your mouse to when it appears on the screen, and this can make the monitor almost unusable.

For gamers, low input lag is even more important because it can be the difference between winning and losing in games. A monitor's input lag isn't the only factor in the total amount of input lag because there's also delay caused by your keyboard/mouse, PC, and internet connection. However, having a monitor with low input lag is one of the first steps in ensuring you get a responsive gaming experience.

Any monitor adds at least a few milliseconds of input lag, but most of the time, it's small enough that you won't notice it at all. There are some cases where the input lag increases to the point where it becomes noticeable, but that's very rare and may not be caused only by the monitor. Your peripherals, like keyboards and mice, add more latency than the monitor, so if you notice any delay, it's likely because of those and not your screen.

There's no definitive amount of input lag at which people start noticing it, because everyone is different. A good estimate is that it starts to become noticeable at around 30 ms, but even a delay of 20 ms can be problematic for reaction-based games. You can try this tool that adds lag to simulate the difference between high and low input lag. You can use it to estimate how much input lag bothers you, but keep in mind this tool is relative and adds lag to the latency you already have.

There are three main reasons why there's input lag during computer use, and it isn't just the monitor that adds it: the acquisition of the image, the processing, and finally the display of the image itself.

The acquisition of the image has to do with the source and not with the monitor. The more time it takes for the monitor to receive the source image, the more input lag there'll be. This has never really been an issue with PCs since previous analog signals were virtually instant, and current digital interfaces like DisplayPort and HDMI have next to no inherent latency. However, some devices like wireless mice or keyboards may add delay. Bluetooth connections especially add latency, so if you want the lowest latency possible in the video acquisition phase, you should use a wired mouse or keyboard or get something wireless with very low latency.

Once the image is in a format that the video processor understands, the processor will apply at least some processing to alter the image somehow. A few examples:

The time this step takes is affected by the speed of the video processor and the total amount of processing. Although you can't control the processor speed, you can control how many operations it needs to do by enabling and disabling settings. Most picture settings won't affect the input lag, and monitors rarely have any image processing, which is why the input lag on monitors tends to be lower than on TVs. One of these settings that could add delay is variable refresh rate, but most modern monitors are good enough that the lag doesn't increase much.

Once the monitor has processed the image, it's ready to be displayed on the screen. This is the step where the video processor sends the image to the screen. The screen can't change its state instantly, and there's a slight delay from when the image is done processing to when it appears on screen. Our input lag measurements consider when the image first appears on the screen and not the time it takes for the image to fully appear (which has to do with our Response Time measurements). Overall, the time it takes to display the image has a big impact on the total input lag.
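Since the three stages happen one after another, their delays add up. A toy model of that budget follows; the class, its field names, and the numbers are invented for illustration and are not measurements of any real monitor.

```python
from dataclasses import dataclass


@dataclass
class LatencyBudget:
    """Hypothetical per-stage delays (ms) for one image, following the three stages above."""
    acquisition_ms: float  # getting the image from the source to the display
    processing_ms: float   # scaling, VRR handling and other image processing
    display_ms: float      # until the new image first appears on the screen

    def total_ms(self) -> float:
        return self.acquisition_ms + self.processing_ms + self.display_ms

    def largest_stage(self) -> str:
        stages = {
            "acquisition": self.acquisition_ms,
            "processing": self.processing_ms,
            "display": self.display_ms,
        }
        return max(stages, key=stages.get)


budget = LatencyBudget(acquisition_ms=2.0, processing_ms=1.0, display_ms=4.0)  # made-up numbers
print(budget.total_ms(), budget.largest_stage())  # 7.0 display
```

Looking at the largest stage first is a simple way to decide which setting or device is worth changing.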



It's a false argument to begin with, and I'm glad the OP is fighting back. We need to get out of the mindset that a display has input lag; to me it doesn't. It's literally only displaying the signal given to it, and since it has no awareness of your inputs, I see it as a dumb notion to link the two.

An ungodly CRT like the GDM-FW900 has no competition, stock or tweaked, even compared to OLED at 1080p. The same goes for Princeton monitors, back when the few of them that existed could be bought by regular customers and not just big companies or very pricey clients. I say that from experience, and I've yet to see any flat panel since CRTs, or any SED demonstration, that has topped either.

How about, when we have a topic like this, we inform people a little more about the areas where LCDs and flat panels made us regress, such as high refresh rates? That's a huge caveat to leave out: CRTs had no problem with high refresh rates even in the 90s. Not only that, but refresh rate, as we now know from the Blur Busters studies, has a direct impact on the pixel persistence of LCDs, so even for LCDs it seems dumb to me not to mention that this factor changes at higher rates. I don't even need to debate this part; that's a fact, and it should be highlighted that 60Hz alone means 16 ms of pixel persistence. There's no doubt that in a high refresh rate test or a ULMB test, LCDs would have even less input lag, which would only help the argument that they aren't as weak as CRTs in this area. I know this because I use such products and immediately see the difference vs. shit 60Hz.

I don't like the tone, because while 60Hz is common, it is not the top of the mountain, not even close. 440Hz monitors exist, but the test only answers the question at 60Hz, which is the bare minimum these days; 15Hz/30Hz screens were phased out a long time ago. I laugh when people use the word "standards" as if we should ignore the larger implications of what the information is telling us. Just as I call out bad networking standards or policies, I will do the same on a subject where consumers can easily enjoy the benefits of a better display.

Simply due to the nature of the topic, I think this should be highlighted and explained well, so that the typical FUD on this subject, even the part you acknowledge, doesn't become more muddled.

Don't get me wrong: the point you make is real and worth fighting for, but we should also highlight the flaws on either side of this debate. As a LightBoost lover I can't let this stand, and I've given my ample technical reasons why.


For PC monitors and smart TVs, speed largely comes down to pixel response and input lag. They’re both measured in milliseconds, and they’re at least a little interrelated – but they’re not the same thing at all.

First let’s establish the basics. In simple terms, response time (or "pixel response") describes the time taken for a display to change the colour of any given pixel, millions of which make up the overall image. Really broadly, pixel response is all about the look of a display. With a fast response time, moving images will be sharp and clear as opposed to blurry and smeared.

As for input lag, that’s a measure of the delay between signal output from a source device, such as a games console, set top box or PC, and the video image being shown on the display. And it’s all about feel. Does the screen respond quickly to your control inputs in a game? If it does, it has low lag or latency. If there’s a noticeable delay between wiggling a mouse or control pad and on-screen movement, then it probably suffers from significant lag.

Anyway, response and lag don’t apply in quite the same way to all display and panel types, be that OLED vs LCD or TVs and PC monitors (note that TVs and other screens marketed as ‘LED’ are typically LCD panels with LED backlights, not actually LED panels).

How is response time / pixel response measured and what do the numbers really mean? The most common metric of pixel response is known as grey-to-grey, sometimes abbreviated to GtG. As the name implies, it’s not a measure of the time taken for a pixel to fully transition from off to on or from black to white. Instead, GtG pixel response records the time taken to move between two intermediate colors.

What’s more, the industry standard VESA method for measuring GtG response doesn’t even record the full time taken for that intermediate transition. It actually discards the first 10% and last 10% of the transition, recording only the time taken for the middle 80%.

Shown on a graph, the pixel response of an LCD panel follows an ‘S’ curve, with a slightly sluggish immediate response, followed by that rapid middle phase, before response tails off dramatically towards the end of the transition. The net result is the time taken to fully transition from one color to another can be dramatically longer than the quoted GtG response.
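The gap between a 10% to 90% figure and the full transition can be illustrated numerically. The sketch below samples a hypothetical, symmetric S-shaped transition (a logistic curve; the midpoint and steepness values are made up, not measured from any panel) and times it two ways: the VESA-style middle 80% and a 1% to 99% window used here as a stand-in for the "full" transition. The helper names are illustrative only.

```python
import math


def s_curve(t_ms, midpoint_ms=2.0, steepness=3.0):
    """Hypothetical S-shaped transition: fraction of the way from the old level to the new one."""
    return 1.0 / (1.0 + math.exp(-steepness * (t_ms - midpoint_ms)))


def crossing_time(times, levels, threshold):
    """First time a rising, sampled curve reaches `threshold` (linear interpolation between samples)."""
    for i in range(1, len(times)):
        if levels[i - 1] < threshold <= levels[i]:
            frac = (threshold - levels[i - 1]) / (levels[i] - levels[i - 1])
            return times[i - 1] + frac * (times[i] - times[i - 1])
    raise ValueError("curve never reaches the threshold")


def transition_time(times, levels, start_frac, end_frac):
    """Time spent between two fractions of the transition, e.g. 0.1 and 0.9."""
    return crossing_time(times, levels, end_frac) - crossing_time(times, levels, start_frac)


# Sample the hypothetical transition every 0.01 ms over 6 ms.
times = [i * 0.01 for i in range(601)]
levels = [s_curve(t) for t in times]

gtg_ms = transition_time(times, levels, 0.10, 0.90)   # VESA-style middle 80%
full_ms = transition_time(times, levels, 0.01, 0.99)  # stand-in for the full transition

print(f"10-90% (GtG-style): {gtg_ms:.2f} ms")
print(f"1-99% (near-full):  {full_ms:.2f} ms")
```

Even on this symmetric curve the near-full time comes out at roughly twice the GtG-style figure; real LCD curves tend to have longer tails, so the gap in practice can be wider.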

There is another measure of pixel response known as MPRT or ‘moving picture response time’. It’s intended to be a better measure of actual perceived blurring based on the abilities of the human eye.

In theory, MPRT response is a direct function of refresh rate, so a refresh rate of 1000Hz is required to achieve a 1ms MPRT pixel response. However, mitigating measures including black-frame insertion or strobing backlights can push MPRT response below the panel's refresh interval, to the point where it's typically faster than a screen's GtG response, at least in terms of quoted specifications.
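The relationship between refresh rate and MPRT can be sketched with a simplified persistence model: on a sample-and-hold display each frame stays lit for the whole refresh period, while a strobed backlight lights it for only part of that period. This model ignores pixel response time entirely, and the 25% duty cycle below is a hypothetical value, not a figure for any real monitor.

```python
def sample_and_hold_persistence_ms(refresh_hz):
    """On a sample-and-hold display each frame stays lit for the whole refresh period."""
    return 1000.0 / refresh_hz


def strobed_persistence_ms(refresh_hz, duty_cycle):
    """With a strobed backlight (or black-frame insertion) the frame is lit for only part of the period.

    duty_cycle is the hypothetical fraction of each refresh period the backlight is on.
    """
    if not 0.0 < duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be in (0, 1]")
    return sample_and_hold_persistence_ms(refresh_hz) * duty_cycle


for hz in (60, 144, 240, 1000):
    print(f"{hz:>5} Hz sample-and-hold: {sample_and_hold_persistence_ms(hz):6.2f} ms")

# Hypothetical strobe: backlight lit for 25% of each 120 Hz refresh.
print(f"  120 Hz with 25% strobe: {strobed_persistence_ms(120, 0.25):6.2f} ms")
```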

That achievement, however, comes with several caveats. For starters, those mitigating measures usually don’t work when variable refresh rate or frame synching is enabled. Moreover, screen modes intended to improve MPRT response tend to reduce vibrancy and visual punch. So, it’s often not possible with a given display to achieve the best available MPRT response while maintaining optimal performance in other regards.

The fastest current LCD panels are quoted at 1ms for GtG response and 0.5ms for MPRT response. But independent testing shows a whole different ballgame. Sources including Rtings.com and Linus Tech Tips peg full-transition pixel response from speedy OLED sets like LG C1 and CX panels at around two to three milliseconds, with the bulk of the transition (and thus the GtG equivalent performance) completed in a fraction of a millisecond.

Results for LCD technology vary a little more, probably due to methodology. But the best case scenario for an ultra-fast-IPS LCD monitor, such as the Asus ROG Swift 360Hz PG259QN, by comparison, is around 3ms for the bulk of the transition and 6ms for the full color change while other results push those two metrics out to 6ms and 10ms or more respectively. Either way, OLED is clearly faster.

The refresh rate of a screen puts a hard limit on the minimum latency or input lag it can achieve. To put some numbers on that, most mainstream monitors and TVs refresh at 60Hz or once every 16.67ms. Increase the refresh rate to 120Hz and the screen updates every 8.33ms.

In the PC gaming monitor market, refresh rates up to 360Hz are now available, translating into a new frame roughly every 2.8ms (TVs can be a bit more complicated due to technologies like frame insertion and motion smoothing, though).

To understand why that matters, imagine for a moment playing a game with a refresh rate of just 1Hz – rather than the 60Hz or 120Hz found on a decent gaming TV. Yes, it would be absolutely horrible in terms of rendering smoothness. But you could wiggle your mouse or controller pad around for a full second and get absolutely no response on the screen. Nightmare.

Now, 16.67ms might not sound like a long time to wait, but it is only the delay imposed by the display's refresh cycle. Any time the screen spends processing the signal adds to it, and so does the rest of the chain: a PC or games console needs time to process a control input, feed it through the game engine and kick out frames in response. It all adds up.
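To make "it all adds up" concrete, here is a rough latency-budget sketch. Every stage value is a hypothetical placeholder rather than a measurement, and the model is simplified: a finished frame waits for the next refresh (a full interval in the worst case, half an interval on average) and scan-out time is ignored. `LatencyChain` and its fields are illustrative names only.

```python
from dataclasses import dataclass


def frame_interval_ms(refresh_hz: float) -> float:
    """Time between screen refreshes, e.g. 16.67 ms at 60 Hz."""
    return 1000.0 / refresh_hz


@dataclass
class LatencyChain:
    # All stage values are hypothetical placeholders, not measurements.
    input_ms: float               # controller/mouse polling and delivery
    game_ms: float                # game engine simulation plus rendering
    display_processing_ms: float  # scaling and image processing inside the display
    refresh_hz: float

    def _fixed_ms(self) -> float:
        return self.input_ms + self.game_ms + self.display_processing_ms

    def worst_case_ms(self) -> float:
        """Frame just missed a refresh and waits one full interval."""
        return self._fixed_ms() + frame_interval_ms(self.refresh_hz)

    def average_ms(self) -> float:
        """Frames finish at random points in the cycle, waiting half an interval on average."""
        return self._fixed_ms() + frame_interval_ms(self.refresh_hz) / 2


for hz in (60, 120, 360):
    chain = LatencyChain(input_ms=1.0, game_ms=10.0, display_processing_ms=5.0, refresh_hz=hz)
    print(f"{hz:>3} Hz: average {chain.average_ms():.1f} ms, worst case {chain.worst_case_ms():.1f} ms")
```

Changing only `display_processing_ms` shows how a heavy image-processing mode can outweigh any gain from a higher refresh rate.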

The other part of the equation is image processing. Until quite recently, that could be death for TVs, because most sets heavily process video by default to adjust and (theoretically) improve the image, whereas PC monitors tend to run with minimal tweaks.

Happily, some TVs now offer a dedicated low-latency game mode with minimal processing. Such TVs tend to be comparable to monitors running at the same refresh rate in terms of lag. The LG C1 OLED TV has been measured as low as 5ms at 120Hz. Intriguingly, running outside of game mode, the C1 is tragically slow at nearly 90ms, which neatly demonstrates just how much impact image processing can have.

In terms of refresh rate, the fastest current PC monitors can hit 360Hz, while the highest refresh TV sets accept an input signal of 120Hz. Some TVs have higher internal refresh rates of 240Hz or more, but in terms of latency or input lag, it’s the signal refresh from the source device that matters.

Long story short, the fastest OLED TVs deliver as little as 5ms of lag, while the quickest PC monitors including the aforementioned Asus panel along with other 360Hz monitors such as the Alienware AW2521H have been clocked at well under 2ms. So while OLED wins out on pixel response, certain LCD monitors have an advantage with input lag.

For OLED TVs, it’s pretty simple. You need a modern set with true 120Hz refresh and a low-latency game mode. For now, there’s simply nothing faster in that market. Pixel response from such displays is beyond reproach, delivering a super crisp, sharp image. In fact, any blurring will largely be a consequence of the limitations of human vision. For latency, 120Hz 4K TVs with OLED are pretty darn good. For most gamers, they’ll feel very slick and responsive. But serious esports players may appreciate something a little bit quicker.

Apart from the differences discussed between GtG response and MPRT, IPS and VA panel types tend not to be entirely comparable. By that we mean that the subjective experience of a 1ms IPS panel is usually that little bit crisper, clearer and cleaner in terms of response than a VA panel. IPS, in short, tends to be faster.

What’s more, pretty much all gaming monitors offer user-configurable overdrive which can accelerate response but also introduce unwanted image quality issues such as overshoot and inverse ghosting. All those caveats aside, the latest 1ms IPS panels deliver the best performance with very low levels of blur, while 1ms VA monitors are just a little behind. The next rung down and probably the slowest you should consider for gaming is 4ms. Depending on the monitor in question, the panel type and the settings used, such screens may not differ that greatly in terms of the subjective experience. But the worst of them will have noticeably more blur than a 1ms display.

Beyond that, you’re into 7ms and beyond territory. On paper, that ought to be fine. But as we’ve seen, even the fastest LCD panels rated at 1ms can be measured at 10 times that long for real-world response. So quoted specifications should be viewed more as a tool with which to categorize screens than set expectations for actual performance.

But what of lag or latency? Most gamers will find that a PC monitor with a 144Hz refresh rate offers no noticeable lag and feels seriously slick and super quick. For truly competitive esports play, there are small gains to be had from 240Hz and 360Hz displays. But for us? We'd be very happy with either a 120Hz OLED TV or a 144Hz 1ms monitor.

I don't think it will cause you to lose fps. If you are running the GT 120 with games at the highest settings, that is your problem, not the LCD. If you get a standard LCD with a 60 or 75Hz refresh rate and your games' fps is already higher than that, you wouldn't notice anyway... the human eye can't keep up beyond those refresh rates. If your games are lagging, tone down the settings a bit or change the resolution. I still run a 9800 GT in my Q8300 system... I turn off AA and set the resolution to 1280x720 or 1600x900 for most games, the quality settings are still on high, and most games are over 60fps anyway. Even if your game and GPU can achieve 120fps, a standard 60Hz LCD can't display more than 60 frames per second.


When playing video games (or even just using the computer), input lag can be a hindrance and can affect how well these tasks are performed. The phenomenon started to get a lot of press when the Xbox 360 and PlayStation 3 came out in 2005 and 2006, because these systems pushed HDTV adoption in the average home. Consumers were happy with the increase in picture quality and resolution they got from these televisions, but it came at a price: input lag. Still, to this day, a lot of people don't realize it's there or how it can affect their enjoyment of these applications.

Quite simply, it's the delay between a button press on your controller and the result on the display. The amount of delay varies between different displays (and is one of the reasons this website was founded).

Ever played Call of Duty, Halo, or maybe Street Fighter online? If you have, you probably know what a laggy connection feels like. But that's not all that's in play here: this feeling is amplified by your display as well! Games like Call of Duty and Halo use netcode that hides network lag so your inputs stay responsive, but when there is lag, it shows up in what happens on the screen (other players jerking around, teleporting, frames dropping, etc.), whereas a game like Street Fighter 4 adds input lag when the connection is laggy, because it has to keep both players' frames synchronized.

Imagine if Call of Duty or Halo had input lag on top of the de-syncing that occurs due to laggy connections. Not only would you have trouble keeping up with the action warping around the screen, but your controller would not respond as accurately as it should. Street Fighter 4 can nearly double or triple its input lag because of a bad display. A fighting game needs instant response times to react and execute combos. Now imagine variable input lag from a bad internet connection plus fixed input lag from a bad gaming display. Sounds horrible, right? That combo that you would normally execute on an excellent display

If you want something nicer, like a big HDTV, you are sacrificing some responsiveness for more features and a bigger screen. Thankfully, you aren't sacrificing much with some HDTVs. Please refer to the displays with a GREAT rating. These HDTVs will suit almost all of your gaming needs, unless you plan to compete in video game tournaments; Excellent displays are suited for that application. I currently use an ASUS monitor for competitive gaming, and a Samsung HDTV for my regular watching and casual games.

There are a variety of factors, but these are the most common ones: a source resolution that differs from the display's native resolution, expensive image processing (such as motion interpolation), and using the display's scaler to convert interlaced images to progressive.

Hopefully upon reading this article, you gained a little more insight on how a display can affect your gaming performance. Feel free to comment below if you have any questions or concerns. Remember, don’t forget to check out the

A German scientist named Karl Ferdinand Braun invented the earliest version of the CRT in 1897. However, his invention did not come out of nowhere: it was one of many related discoveries and inventions made from the mid-1800s onward.

CRT technology isn’t just for displays; it can also be utilized for storage. These storage tubes can hold onto a picture for as long as the tube is receiving electricity.

Like the CRT, the invention of the modern LCD was not a one-man show. It began in 1888 when the Austrian botanist and chemist Friedrich Richard Kornelius Reinitzer discovered liquid crystals.

CRT stands for cathode-ray tube; a CRT display is a TV or PC monitor that produces images using an electron gun. These were the first electronic displays available, but they are now outdated, replaced by slimmer, more compact, and more energy-efficient LCD monitors.

In contrast, a liquid crystal display, or LCD monitor, uses liquid crystals to produce sharp, flicker-free images. LCDs are now the standard and have largely replaced traditional CRTs.

Although the production of CRT monitors has slowed down, due to environmental concerns and the physical preferences of consumers, they still have several advantages over the new-age LCD monitors. Below, we shed some light on the differences between CRT and LCD displays.

| | CRT | LCD |
|---|---|---|
| What it is | Among the earliest electronic displays; uses a cathode ray tube | A flat-panel display that uses the light-modulating properties of liquid crystals |
| Flickering | Visible to the naked eye, especially at low refresh rates | Almost negligible, because pixels hold their state between refreshes |

CRTs have a big scaling advantage because, unlike LCDs, they don't have a fixed resolution. This means that CRTs can handle many combinations of resolution and refresh rate sent by the computer.

As a result, a CRT can display whatever resolution the computer sends (within the limits of its scanning circuitry) without the compromises of rescaling. CRT monitors can also drop to a lower resolution simply by changing how the electron beam scans, without the interpolation blur a fixed-pixel display introduces.

On the other hand, LCD monitors have a fixed (native) resolution, so any image that doesn't match it has to be adjusted: it is either shown at its original size and centered on the screen, or scaled to fit the native resolution.
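The two adjustments a fixed-resolution panel can make are easy to sketch. The helpers below are hypothetical (no real scaler exposes these names): one scales the source to fit while keeping its aspect ratio, then centers it; the other keeps a 1:1 pixel mapping and just centers it. Rounding and integer centering are assumptions of this sketch.

```python
def fit_to_native(src_w, src_h, native_w, native_h):
    """Scale a source image to fit a fixed-resolution panel, keeping its aspect ratio.

    Returns (scaled_w, scaled_h, offset_x, offset_y); the offsets are the borders
    left on each side when the scaled image is centered.
    """
    scale = min(native_w / src_w, native_h / src_h)
    scaled_w = round(src_w * scale)
    scaled_h = round(src_h * scale)
    offset_x = (native_w - scaled_w) // 2
    offset_y = (native_h - scaled_h) // 2
    return scaled_w, scaled_h, offset_x, offset_y


def center_unscaled(src_w, src_h, native_w, native_h):
    """1:1 pixel mapping: keep the image's size and center it (only if it fits)."""
    if src_w > native_w or src_h > native_h:
        raise ValueError("source is larger than the panel")
    return (native_w - src_w) // 2, (native_h - src_h) // 2


# A 5:4 signal on a 1920 x 1080 panel.
print(fit_to_native(1280, 1024, 1920, 1080))
print(center_unscaled(1280, 1024, 1920, 1080))
```

Scaling fills more of the screen but requires interpolating new pixels; centering avoids interpolation at the cost of a smaller picture with black borders.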

CRT displays form images by picking up the incoming signal and splitting it into audio and video components. The video signal drives the electron guns in the neck of the tube, and their beams pass through a metal mesh (the shadow mask) to strike the phosphor coating on the inside of the screen, which glows to form the final image.

The images on the phosphor-coated screen are made up of tiny red, green, and blue (RGB) phosphor dots, which combine to create countless different hues. The electron beam scans across the front of the tube line by line, redrawing the whole image many times every second (typically 50 to 85 times on TVs and mainstream monitors, and more than 100 on some high-end monitors).

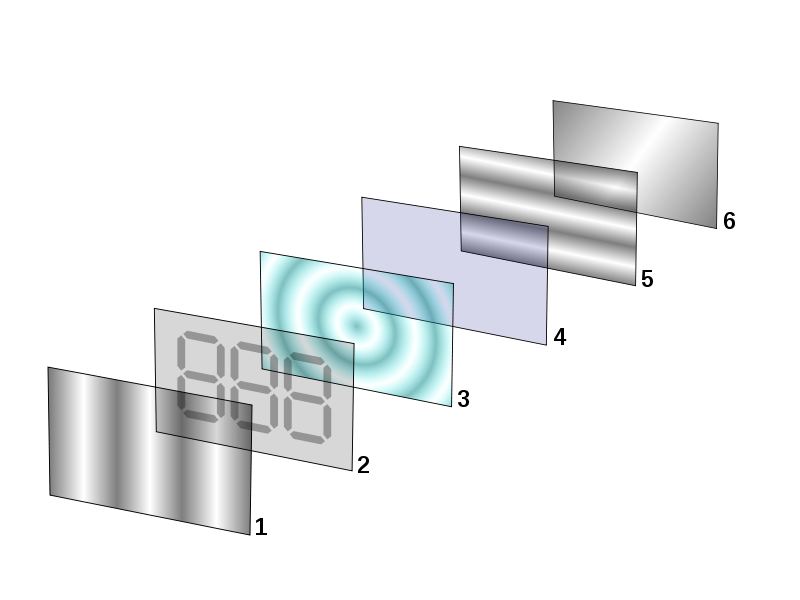

LCD screens, on the other hand, are made of two pieces of polarized glass that house a thin layer of liquid crystals. They work by selectively blocking light: light from a backlight passes through the liquid crystal layer, and the voltage applied to it changes how the crystals twist the light's polarization, which determines how much light gets through the front polarizer.

The voltage applied to each pixel sets how its liquid crystal molecules are aligned, which controls how much light passes through each color filter and so forms the colors and images you see on the screen. Each pixel is made up of three subpixels (red, green, and blue), each with its own color filter, and together they can produce millions of different colors.

LCD screens also offer crisper static images than CRT monitors. Each LCD pixel is a fixed, sharply defined cell with its own red, green, and blue subpixels, whereas a CRT's electron beam spot spreads across neighboring phosphor dots, which softens edges slightly.

LCD screens can also produce at least twice the brightness of a typical CRT, so their image holds up better under sunlight or strong artificial lighting, which reduces blurriness and eyestrain.

Over time, however, LCD screens can develop dead or stuck pixels, which show up as black or other colored dots and reduce the visual clarity of your screen.

CRT monitors also have better motion resolution than LCDs. LCDs lose considerable resolution when content is in motion, both because of slow pixel response and because each frame is held on screen for the whole refresh period, making moving images look blurry or streaky.

CRTs add essentially no display lag, because the incoming signal drives the electron beam directly instead of being stored and processed first; the picture is drawn as fast as it arrives. Lag is, however, a common problem, especially with older LCD displays.
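A CRT adds no buffering delay, but it still draws each frame from top to bottom over one refresh period, so a line near the bottom of the screen lights up later than one near the top. The sketch below estimates that scan-out delay for a given line. It assumes a constant beam speed and ignores the vertical blanking interval; the 85 Hz, 800-line mode is a hypothetical example.

```python
def scanout_delay_ms(line, total_lines, refresh_hz):
    """Time from the start of a frame until the beam reaches `line` (0 = top of the screen).

    Simplifying assumption: the beam moves down at a constant rate and the
    vertical blanking interval is ignored.
    """
    if not 0 <= line < total_lines:
        raise ValueError("line must be between 0 and total_lines - 1")
    frame_ms = 1000.0 / refresh_hz
    return frame_ms * line / total_lines


# Hypothetical mode: 85 Hz with 800 scan lines per frame.
for label, line in (("top", 0), ("middle", 400), ("bottom", 799)):
    print(f"{label:>6}: {scanout_delay_ms(line, 800, 85):.2f} ms after the frame starts")
```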

CRTs are prone to flickering, because each phosphor dot glows briefly and fades before the beam returns, producing alternating periods of brightness and darkness. LCDs flicker far less because their pixels hold their state between refreshes.

CRTs have a thick, clunky design that many find unappealing. A plastic or metal casing (cabinet) houses the cathode ray tube, whose funnel-shaped glass section and narrow neck are made of leaded glass and coated with a conductive layer.

A thick glass front panel, usually curved, forms the screen and accounts for about 65% of a CRT's total weight.

LCDs feature low-profile designs that make them a natural choice for portable devices like smartphones and tablets. They are lightweight and can be made far larger than the largest CRTs, which topped out at around 40–45 inches.

The invention of the cathode ray tube began with the discovery of cathode rays by Julius Plucker and Johann Heinrich Wilhelm Geissler in 1854. In 1855, Geissler built a hand-cranked mercury pump that produced a far better vacuum, which he used to make evacuated glass tubes that became known as "Geissler tubes."

Later, in 1859, Plucker inserted metal plates into the Geissler tube and noticed shadows being cast on the glowing walls of the tube. He also noticed that the rays bent under the influence of a magnet.

Sir William Crookes confirmed the existence of cathode rays in 1878 by displaying them in the “Crookes tube” and showing that the rays could be deflected by magnetic fields.

Later, in 1897, Karl Ferdinand Braun, a German physicist, invented a cathode ray tube with a fluorescent screen and named it the "Braun Tube." By developing the cathode ray tube oscilloscope, he became the first person to use a CRT as a display device.

Later, in 1907, the Russian scientist Boris Rosing, joined by Vladimir Zworykin, used a cathode ray tube in a television receiver to display simple geometric patterns on the screen.

LCD displays are a much more recent discovery compared to CRTs. Interestingly, the French professor of mineralogy, Charles-Victor Mauguin, performed the first experiments with liquid crystals between plates in 1911.

George H. Heilmeier, an American engineer, made contributions to the LCD significant enough to earn him induction into the National Inventors Hall of Fame. In 1968, he presented the liquid crystal display to the professional world; that early display operated at a temperature of 80 degrees Celsius.

Many other inventors worked on the development of LCDs. In the 1970s, new work focused on making LCDs operate at room temperature, and by the 1980s the liquid crystal mixtures had been refined enough to drive strong demand and a boom in promotion. The first LCDs were produced in 1971 and 1972 by ILIXCO (now LXD Incorporated).

Although they may come in at a higher price point, LCD displays are more convenient in the long run. They last almost twice as long as CRTs, are more energy efficient, and their compact, thin form makes them ideal for modern use.

LCDs are also more affordable than many other display types available today. You might still choose a CRT monitor for its faster response and its flexible resolution support. However, we don't see why you should look back, since there are so many newer options that outperform both CRTs and LCDs.

Gaming monitors are designed to make the output of your graphics card and CPU look as good as possible while gaming. They're responsible for displaying the final result of all of your computer's image rendering and processing, yet they can vary widely in their representation of color, motion, and image sharpness. When considering what to look for in a gaming monitor, it's worth taking the time to understand everything a gaming monitor can do, so you can translate gaming monitor specs and marketing into real-world performance.

Display technology changes over time, but the basic goals of monitor manufacturers remain consistent. We'll break down each group of monitor features below to isolate their benefits.

Resolution is a key feature of any monitor. It measures the width and height of the screen in terms of pixels, or “picture elements”, the tiny points of illumination that compose an image. A 2,560 × 1,440 screen, for example, has a total of 3,686,400 pixels.

Common resolutions include 1,920 × 1,080 (sometimes called “Full HD” or FHD), 2,560 × 1,440 (“Quad HD”, QHD, or “Widescreen Quad HD”, WQHD), and 3,840 × 2,160 (UHD, or “4K Ultra HD”). Ultrawide monitors are also available with resolutions such as 2,560 × 1,080 (UW-FHD), 3,440 × 1,440 (UW-QHD), 3,840 × 1,080 (DFHD), and 5,120 × 1,440 (DQHD).

Sometimes manufacturers only reference one measurement for standard resolutions: 1080p and 1440p refer to height, while 4K refers (approximately) to width. Any resolution of 1,280 × 720 or higher is considered high definition (HD).
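Pixel totals like the 3,686,400 quoted for 2,560 × 1,440 are simply width × height. The short script below tabulates the resolutions listed above and compares each with Full HD; the dictionary is just a convenient illustration, not a standard table.

```python
# Resolutions named in the text: label -> (width, height).
RESOLUTIONS = {
    "FHD": (1920, 1080),
    "QHD": (2560, 1440),
    "UHD": (3840, 2160),
    "UW-FHD": (2560, 1080),
    "UW-QHD": (3440, 1440),
    "DFHD": (3840, 1080),
    "DQHD": (5120, 1440),
}


def pixel_count(width, height):
    """Total pixels on a width x height grid."""
    return width * height


fhd_total = pixel_count(*RESOLUTIONS["FHD"])
for name, (width, height) in RESOLUTIONS.items():
    total = pixel_count(width, height)
    print(f"{name:>6}: {width} x {height} = {total:,} pixels ({total / fhd_total:.2f}x FHD)")
```

The UHD row confirms that a 4K panel has exactly four times as many pixels as a 1080p one.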

The pixels being counted in these measurements are usually rendered the same way: As squares on a two-dimensional grid. To see this, you can either move closer to (or magnify) the screen until you perceive individual blocks of color, or zoom in on an image until it becomes “pixelated”, and you see a staircase of small squares instead of clean diagonal lines.

As you increase your display resolution, it gets harder to pick out individual pixels with the naked eye, and the clarity of the picture increases in turn.

Beyond increasing the detail onscreen in games or movies, there's another benefit to higher resolutions. They give you more desktop real estate to work with. That means you get a larger workspace on which to arrange windows and applications.

You might already know that a screen with 4K display resolution doesn't magically make everything it displays look 4K. If you play a 1080p video stream on it, that content usually won't look as good as a 4K Blu-ray. However, it may still look closer to 4K than it used to, thanks to a process called upscaling.

Upscaling is a way to scale lower-resolution content to a higher resolution. When you play a 1080p video on a 4K monitor, the monitor needs to “fill in” all of the missing pixels that it expects to display (as a 4K monitor has four times as many pixels as 1080p). A built-in scaler interpolates new pixels by examining the values of surrounding pixels. HDTVs often feature more complex upscaling than PC monitors (with line-sharpening and other improvements), whereas monitors often simply turn one pixel into a larger block of identical pixels. The scaler is likely to cause some blurring and ghosting (double images), especially if you look closely.

Monitors can also change resolution. Modern screens have a fixed number of pixels, which defines their “native resolution”, but they can also be set to approximate lower resolutions. As you scale down, onscreen objects will look larger and fuzzier, screen real estate will shrink, and visible jaggedness may result from interpolation. (Note that it wasn’t always this way: older analog CRT monitors can actually switch between resolutions without interpolation, as they do not have a set number of pixels.)
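The difference between "turning one pixel into a block" and interpolating from neighbors can be seen on a tiny example. The sketch below implements two textbook methods, nearest-neighbor and bilinear interpolation, on a 2 × 2 greyscale image; it is not the algorithm of any particular monitor or TV scaler, and the helper names are illustrative.

```python
def nearest_neighbor_upscale(image, factor):
    """Turn each source pixel into a factor x factor block of identical pixels."""
    out = []
    for row in image:
        # Repeat every value horizontally...
        wide_row = [value for value in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        out.extend(list(wide_row) for _ in range(factor))
    return out


def bilinear_upscale(image, factor):
    """Interpolate each new pixel from the (up to) four nearest source pixels."""
    src_h, src_w = len(image), len(image[0])

    def source_coord(out_index, src_size):
        # Map the centre of an output pixel back into source coordinates, clamped to the image.
        pos = (out_index + 0.5) / factor - 0.5
        pos = min(max(pos, 0.0), src_size - 1)
        lo = int(pos)
        hi = min(lo + 1, src_size - 1)
        return lo, hi, pos - lo

    out = []
    for y in range(src_h * factor):
        y0, y1, fy = source_coord(y, src_h)
        row = []
        for x in range(src_w * factor):
            x0, x1, fx = source_coord(x, src_w)
            top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
            bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A tiny greyscale "image" with a hard black-to-white edge.
edge = [[0, 255],
        [0, 255]]
print(nearest_neighbor_upscale(edge, 2)[0])
print(bilinear_upscale(edge, 2)[0])
```

Nearest-neighbor keeps the edge hard but blocky; bilinear inserts intermediate grey values, which is the softening the text describes as blurring.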

Screens with 4K resolution and higher introduce another scaling concern: at ultra-high definition, text and interface elements like buttons can start to look small. This is especially true on smaller 4K screens when using programs that don’t automatically resize their text and UI.

Windows’ screen scaling settings can increase the size of text and layout elements, but at the cost of reducing screen real estate. There’s still a benefit of increased resolution, even when this scaling is used: onscreen content, like an image in an editing program, will appear at 4K resolution even if the menus around it have been rescaled.

Manufacturers measure screen size diagonally, from corner to corner. A larger screen size, in tandem with a higher resolution, means more usable screen space and more immersive gaming experiences.

Players sit or stand close to their monitors, often within 20”-24”. This means that the screen itself fills much more of your vision than an HDTV (when seated at the couch) or a smartphone/tablet. (Monitors boast the best ratio of diagonal screen size to viewing distance among common displays, with the exception of virtual reality headsets). The benefits of 1440p or 4K resolution are more immediately perceptible in this close-range situation.

Basically, you want to find a screen where you never perceive an individual pixel. You can do this using online tools that measure pixel density (in pixels per inch), which tells you the relative “sharpness” of the screen by determining how closely pixels are packed together, or the alternative pixels per degree metric, which takes viewing distance into account so it can be compared directly against the limits of human vision.
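Both metrics can be computed directly. The sketch below uses the standard diagonal formula for pixels per inch and converts it to pixels per degree at a given viewing distance, measured at the center of the screen. A common rule of thumb is that 20/20 vision resolves detail down to about one arcminute, or roughly 60 pixels per degree; the 27-inch and 20-inch figures match the example in the next paragraph.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density: diagonal resolution in pixels divided by the diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


def pixels_per_degree(ppi, viewing_distance_in):
    """Pixels packed into one degree of your field of view at the screen center."""
    inches_per_degree = 2 * viewing_distance_in * math.tan(math.radians(0.5))
    return ppi * inches_per_degree


ppi_27_4k = pixels_per_inch(3840, 2160, 27)
ppd_27_4k = pixels_per_degree(ppi_27_4k, 20)
ppi_27_qhd = pixels_per_inch(2560, 1440, 27)
ppd_27_qhd = pixels_per_degree(ppi_27_qhd, 20)

print(f'27" 4K:   {ppi_27_4k:.0f} PPI, {ppd_27_4k:.0f} PPD at 20"')
print(f'27" 1440p: {ppi_27_qhd:.0f} PPI, {ppd_27_qhd:.0f} PPD at 20"')
```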

It's also worth considering your own eyesight and desktop setup. If you have 20/20 vision and your eyes are around 20” from your screen, a 27” 4K panel will provide an immediate visual upgrade. However, if you know your eyesight is worse than 20/20, or you prefer to sit more than 24” away, a 1440p panel may look just as good to you.

A monitor's aspect ratio is the proportion of width to height. A 1:1 screen would be completely square; the boxy monitors of the 1990s were typically 4:3, or “standard”. They have largely been replaced by widescreen (16:9) and some ultrawide (21:9, 32:9, 32:10) aspect ratios.

Most online content, such as YouTube videos, also defaults to a widescreen aspect ratio. However, you'll still see horizontal black bars onscreen when watching movies or TV shows shot in theatrical widescreen (2.39:1, wider than 16:9), and vertical black bars when watching smartphone videos shot in thinner “portrait” mode. These black bars preserve the original proportions of the video without stretching or cropping it.
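An aspect ratio can be derived from a resolution by dividing width and height by their greatest common divisor. Doing so shows that labels like 21:9 are rounded marketing names: the ultrawide resolutions mentioned earlier reduce to slightly different exact ratios. The helper names below are illustrative.

```python
from math import gcd


def aspect_ratio(width, height):
    """Reduce a resolution to its simplest whole-number width:height ratio."""
    d = gcd(width, height)
    return width // d, height // d


def ratio_as_decimal(width, height):
    """Width divided by height, e.g. 1.78 for 16:9."""
    return width / height


for w, h in ((1920, 1080), (2560, 1440), (1280, 1024), (2560, 1080), (3440, 1440), (5120, 1440)):
    rw, rh = aspect_ratio(w, h)
    print(f"{w} x {h}: {rw}:{rh} ({ratio_as_decimal(w, h):.2f}:1)")
```

For example, 2,560 × 1,080 works out to 64:27 and 3,440 × 1,440 to 43:18, both sold as "21:9".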

Ultrawides

Why opt for an ultrawide screen over regular widescreen? They offer a few advantages: They fill more of your vision, they can provide a movie-watching experience closer to the theater (as 21:9 screens eliminate “letterboxing” black bars for widescreen films), and they let you expand field of view (FOV) in games without creating a “fisheye” effect. Some players of first-person games prefer a wider FOV to help them spot enemies or immerse themselves in the game environment. (But note that some popular FPS games do not support high FOV settings, as they can give players an advantage.)

Curved screens are ano
