not supported with gsync lcd panel brands

Information on this error message is REALLY sketchy online. Some say that the G-Sync LCD panel is hardwired to the dGPU and that the iGPU is connected to nothing. Some say that the dGPU is connected to the G-Sync LCD through the iGPU. Some say that they got the MUX switch working after an intentional ordering of BIOS update, iGPU drivers, then dGPU drivers on a clean install.

I'm suspecting that if I connect an external 60Hz IPS monitor to one of the display ports on the laptop and make it the only display, the Fn+F7 key will actually switch the graphics because the display is not a G-Sync LCD panel. Am I right on this?

If I'm right on this, does that mean that if I purchase this laptop, order a 15-inch Alienware 60Hz IPS screen and swap it with the FHD 120+Hz screen currently inside, I will also continue to have MUX switch support and no G-Sync? The price for these screens is not outrageous.

At first I thought that maybe I was sent a laptop with a G-Sync display, but when I checked in Device Manager the display is listed as "Generic PnP display" with no mention of G-Sync. Yet I can't seem to turn off the GPU, and whenever I press Fn+F7 I get the following message: "not supported with g-sync ips display", even though the display is not a G-Sync display.

Make sure the monitor supports Nvidia’s G-Sync technology - a list of supported monitors at the time of this article can be found on Nvidia's website.

Make sure a DisplayPort cable is being used - G-Sync is only compatible with DisplayPort. It must be a standard DisplayPort cable using no adapters or conversions. HDMI, DVI and VGA are not supported.

Under the Display tab on the left side of the Nvidia Control Panel, choose Set up G-Sync, followed by Enable G-Sync, G-Sync Compatible checkbox. Note: If the monitor has not been validated as G-Sync Compatible, select the box under Display Specific Settings to force G-Sync Compatible mode on. See the warning NOTE at the end of the article before proceeding.

NOTE: If the monitor supports VRR (Variable Refresh Rate) technologies but is not on the list above, use caution before proceeding. It may still work, however there may be issues when using the technology. Known issues include blanking, pulsing, flickering, ghosting and visual artifacts.

Unlike monitors, which support G-SYNC Compatible mode with 10-Series graphics cards, G-SYNC Compatible mode on TVs requires at least a 16-Series graphics card. On most TVs, you'll also have to enable FreeSync or Adaptive Sync for it to work. Some newer models have separate toggles for FreeSync and G-SYNC Compatible.

To enable FreeSync, you must first select "G-SYNC Compatible" from the Monitor Technology setting under NVIDIA Control Panel. Once this setting is enabled, you should see a new option, Set up G-SYNC, appear under the Display menu. From there, you can enable G-SYNC for either full-screen mode or both full-screen and windowed mode.

NVIDIA updates their list of supported TVs and Monitors frequently; you can see the current list on NVIDIA's website. If your TV is supposed to be supported but isn't working, check the following:

Make sure your graphics card drivers are up to date. This is how NVIDIA adds new models to the supported list. Note that the list on NVIDIA's website isn't always perfectly up-to-date; there may be other models supported that haven't been listed.

TVs are becoming more and more advanced, with impressive new gaming features, including G-SYNC Compatibility. New technologies like HDMI Forum VRR and G-SYNC Compatibility blur the line between TVs and monitors, and TVs often work with certain technologies without being officially certified. We check for G-SYNC Compatibility on all TVs and test the range of refresh rates the TV supports. Understanding the range a TV supports will give you a better idea of what to expect when gaming with certain sources. The addition of Adaptive Sync to NVIDIA's drivers is a welcome change and represents a significant policy shift at NVIDIA.

If you want smooth gameplay without screen tearing and you want to experience the high frame rates that your Nvidia graphics card is capable of, Nvidia’s G-Sync adaptive sync tech, which unleashes your card’s best performance, is a feature that you’ll want in your next monitor.

To get this feature, you can spend a lot on a monitor with G-Sync built in, like the high-end $1,999 Acer Predator X27, or you can spend less on a FreeSync monitor that has G-Sync compatibility by way of a software update. (As of this writing, there are 15 monitors that support the upgrade.)

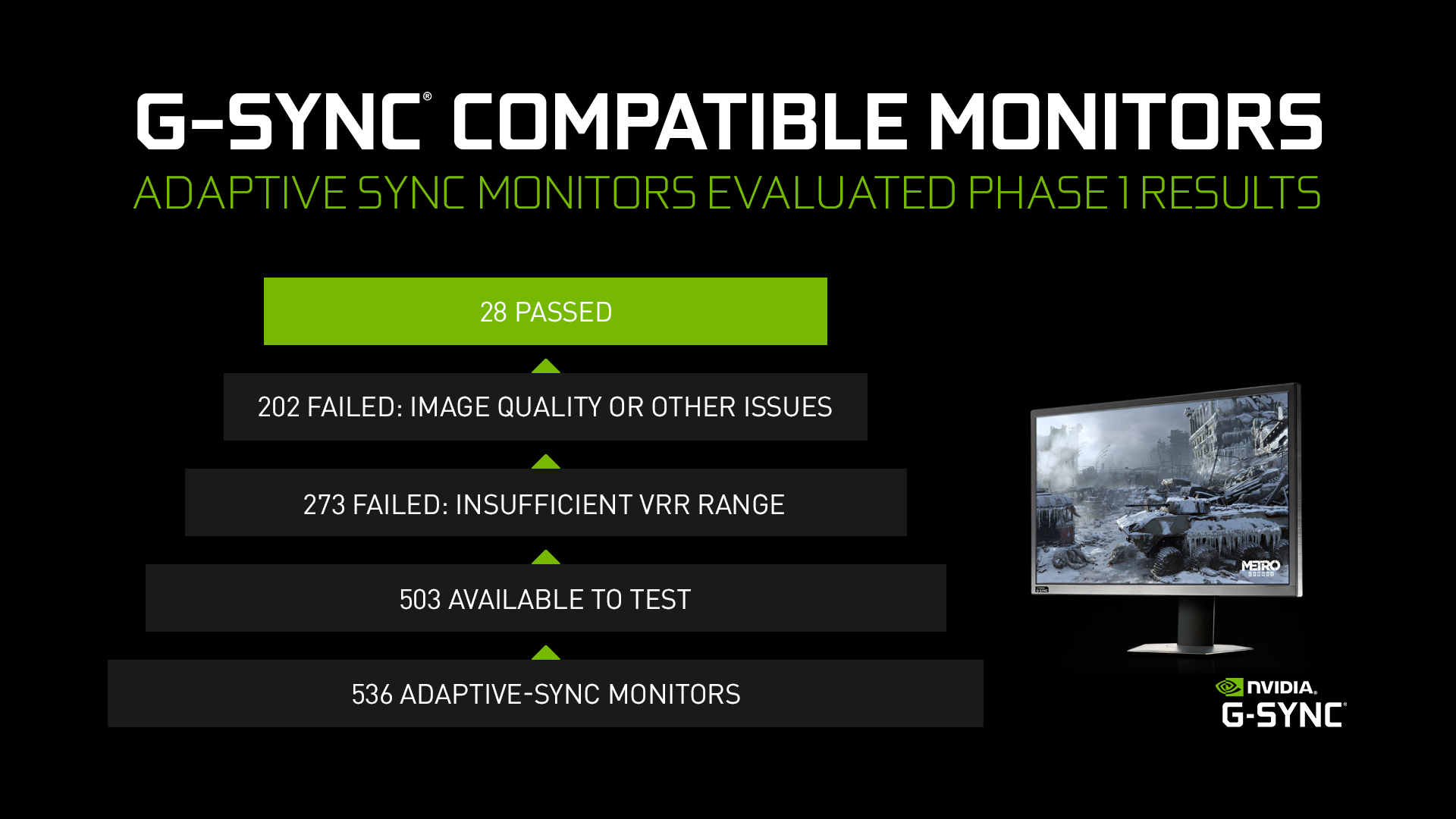

However, there are still hundreds of FreeSync models that will likely never get the feature. According to Nvidia, “not all monitors go through a formal certification process, display panel quality varies, and there may be other issues that prevent gamers from receiving a noticeably improved experience.”

But even if you have an unsupported monitor, it may be possible to turn on G-Sync. You may even have a good experience — at first. I tested G-Sync with two unsupported models, and, unfortunately, the results just weren’t consistent enough to recommend over a supported monitor.

The 32-inch AOC CQ32G1 curved gaming monitor, for example, which is priced at $399, presented no issues when I played Apex Legends and Metro: Exodus, at first. Then some flickering started appearing during gameplay, though I hadn’t made any changes to the visual settings. I also tested it with Yakuza 0, which, surprisingly, served up the worst performance, even though it’s the least demanding title that I tested. Whether it was in full-screen or windowed mode, the frame rate was choppy.

Another unsupported monitor, the $550 Asus MG279Q, handled both Metro: Exodus and Forza Horizon 4 without any noticeable issues. (It’s easy to confuse the MG279Q for the Asus MG278Q, which is on Nvidia’s list of supported FreeSync models.) In Nvidia’s G-Sync benchmark, there was significant tearing early on, but, oddly, I couldn’t re-create it.

Before you begin, note that in order to achieve the highest frame rates with or without G-Sync turned on, you’ll need to use a DisplayPort cable. If you’re using a FreeSync monitor, chances are good that it came with one. But if not, they aren’t too expensive.

First, download and install the latest driver for your GPU, either from Nvidia’s website or through the GeForce Experience, Nvidia’s Windows 10 app that can tweak graphics settings on a per-game basis. All of Nvidia’s drivers since mid-January 2019 have included G-Sync support for select FreeSync monitors. Even if you don’t own a supported monitor, you’ll probably be able to toggle G-Sync on once you install the latest driver. Whether it will work well after you do turn the feature on is another question.

Once the driver is installed, open the Nvidia Control Panel. On the side column, you’ll see a new entry: Set up G-Sync. (If you don’t see this setting, switch on FreeSync using your monitor’s on-screen display. If you still don’t see it, you may be out of luck.)

Check the box that says “Enable G-Sync Compatible,” then click “Apply” to activate the settings. (The settings page will inform you that your monitor is not validated by Nvidia for G-Sync. Since you already know that is the case, don’t worry about it.)

Nvidia offers a downloadable G-Sync benchmark, which should quickly let you know if things are working as intended. If G-Sync is active, the animation shouldn’t exhibit any tearing or stuttering. But since you’re using an unsupported monitor, don’t be surprised if you see some iffy results. Next, try out some of your favorite games. If something is wrong, you’ll realize it pretty quickly.

There’s a good resource to check out on Reddit, where its PC community has created a huge list of unsupported FreeSync monitors, documenting each monitor’s pros and cons with G-Sync switched on. These real-world findings are insightful, but what you experience will vary depending on your PC configuration and the games that you play.


It’s difficult to buy a computer monitor, graphics card, or laptop without seeing AMD FreeSync and Nvidia G-Sync branding. Both promise smoother, better gaming, and in some cases both appear on the same display. But what do G-Sync and FreeSync do, exactly – and which is better?

Most AMD FreeSync displays can sync with Nvidia graphics hardware, and most G-Sync Compatible displays can sync with AMD graphics hardware. This is unofficial, however.

The first problem is screen tearing. A display without adaptive sync will refresh at its set refresh rate (usually 60Hz, or 60 refreshes per second) no matter what. If the refresh happens to land between two frames, well, tough luck – you’ll see a bit of both. This is screen tearing.

Screen tearing is ugly and easy to notice, especially in 3D games. To fix it, games started to use a technique called V-Sync that locks the framerate of a game to the refresh rate of a display. This fixes screen tearing but also caps the performance of a game. It can also cause uneven frame pacing in some situations.

Adaptive sync is a better solution. A display with adaptive sync can change its refresh rate in response to how fast your graphics card is pumping out frames. If your GPU sends over 43 frames per second, your monitor displays those 43 frames, rather than forcing 60 refreshes per second. Adaptive sync stops screen tearing by preventing the display from refreshing with partial information from multiple frames but, unlike with V-Sync, each frame is shown immediately.
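The difference between V-Sync and adaptive sync can be sketched as a small timing model. This is only an illustration under simplifying assumptions: the function names are hypothetical, frames are described purely by the time (in milliseconds) at which the GPU finishes them, and the 144Hz ceiling is an arbitrary example value rather than a property of any particular monitor.

```python
import math

def vsync_display_times(ready_ms, refresh_hz=60):
    """Fixed refresh with V-Sync: each frame waits for the next refresh tick."""
    period = 1000.0 / refresh_hz
    return [math.ceil(t / period) * period for t in ready_ms]

def adaptive_display_times(ready_ms, max_hz=144):
    """Adaptive sync: each frame is shown as soon as it is ready,
    but never sooner than the panel's minimum refresh interval allows."""
    min_gap = 1000.0 / max_hz
    shown = []
    last = -math.inf
    for t in ready_ms:
        when = max(t, last + min_gap)
        shown.append(when)
        last = when
    return shown

# Three frames finished at irregular times (milliseconds).
ready = [10, 30, 55]
print(vsync_display_times(ready))     # each frame is delayed to a 60Hz tick
print(adaptive_display_times(ready))  # each frame is shown when it is ready
```

In this model the V-Sync frames are pushed back to the next multiple of roughly 16.7ms, while the adaptive sync frames appear at the moment they are finished.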

VESA Adaptive Sync is an open standard that any company can use to enable adaptive sync between a device and display. It’s used not only by AMD FreeSync and Nvidia G-Sync Compatible monitors but also other displays, such as HDTVs, that support Adaptive Sync.

AMD FreeSync and Nvidia G-Sync Compatible are so similar, in fact, they’re often cross compatible. A large majority of displays I test with support for either AMD FreeSync or Nvidia G-Sync Compatible will work with graphics hardware from the opposite brand.

This is how all G-Sync displays worked when Nvidia brought the technology to market in 2013. Unlike Nvidia G-Sync Compatible monitors, which often (unofficially) work with AMD Radeon GPUs, G-Sync is unique and proprietary. It only supports adaptive sync with Nvidia graphics hardware.

It’s usually possible to switch sides if you own an AMD FreeSync or Nvidia G-Sync Compatible display. If you buy a G-Sync or G-Sync Ultimate display, however, you’ll have to stick with Nvidia GeForce GPUs. (Here’s our guide to the best graphics cards for PC gaming.)

G-Sync and G-Sync Ultimate support the entire refresh range of a panel – even as low as 1Hz. This is important if you play games that may hit lower frame rates, since Adaptive Sync matches the display refresh rate with the output frame rate.

For example, if you’re playing Cyberpunk 2077 at an average of 30 FPS on a 4K display, that implies a refresh rate of 30Hz – which falls outside the range VESA Adaptive Sync supports. AMD FreeSync and Nvidia G-Sync Compatible may struggle with that, but Nvidia G-Sync and G-Sync Ultimate won’t have a problem.

AMD FreeSync Premium and FreeSync Premium Pro have their own technique of dealing with this situation called Low Framerate Compensation. It repeats frames to double the output such that it falls within a display’s supported refresh rate.
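As a rough sketch of the idea behind frame repetition, the function below picks the smallest whole-number repeat count that lifts a low frame rate into the display's range. The function name and the 48-144Hz range are assumptions chosen for illustration, and generalising "doubling" to any integer multiple is also an assumption; AMD's actual driver logic is not described in the text above.

```python
import math

def lfc_refresh_rate(fps, min_hz, max_hz):
    """Return (repeat_count, refresh_hz) for a given frame rate,
    or None if no whole-number repeat fits inside [min_hz, max_hz].

    Hypothetical helper: illustrates the principle only.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= min_hz:
        # Already inside (or above) the range: no repetition needed.
        return 1, min(fps, max_hz)
    repeat = math.ceil(min_hz / fps)
    rate = fps * repeat
    if rate > max_hz:
        return None  # range too narrow for frame repetition to work
    return repeat, rate

print(lfc_refresh_rate(30, 48, 144))  # each frame shown twice
print(lfc_refresh_rate(40, 48, 60))   # no multiple of 40 fits in 48-60Hz
```

The second call shows why a narrow refresh range matters: if the maximum is not sufficiently above the minimum, there is no multiple of the frame rate that lands inside the range.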

Other differences boil down to certification and testing. AMD and Nvidia have their own certification programs that displays must pass to claim official compatibility. This is why not all VESA Adaptive Sync displays claim support for AMD FreeSync and Nvidia G-Sync Compatible.

This is a bunch of nonsense. Neither has anything to do with HDR, though it can be helpful to understand that some level of HDR support is included in those panels. The most common HDR standard, HDR10, is an open standard from the Consumer Technology Association. AMD and Nvidia have no control over it. You don’t need FreeSync or G-Sync to view HDR, either, even on each company’s graphics hardware.

Both standards are plug-and-play with officially compatible displays. Your desktop’s video card will detect that the display is certified and turn on AMD FreeSync or Nvidia G-Sync automatically. You may need to activate the respective adaptive sync technology in your monitor settings, however, though that step is a rarity in modern displays.

Displays that support VESA Adaptive Sync, but are not officially supported by your video card, require you to dig into AMD or Nvidia’s driver software and turn on the feature manually. This is a painless process, however – just check the box and save your settings.

AMD FreeSync and Nvidia G-Sync are also available for use with laptop displays. Unsurprisingly, laptops that have a compatible display will be configured to use AMD FreeSync or Nvidia G-Sync from the factory.

A note of caution, however: not all laptops with AMD or Nvidia graphics hardware have a display with Adaptive Sync support. Even some gaming laptops lack this feature. Pay close attention to the specifications.

VESA’s Adaptive Sync is on its way to being the common adaptive sync standard used by the entire display industry. Though not perfect, it’s good enough for most situations, and display companies don’t have to fool around with AMD or Nvidia to support it.

That leaves AMD FreeSync and Nvidia G-Sync searching for a purpose. AMD FreeSync and Nvidia G-Sync Compatible are essentially certification programs that monitor companies can use to slap another badge on a product, though they also ensure out-of-the-box compatibility with supported graphics cards. Nvidia’s G-Sync and G-Sync Ultimate are technically superior, but require proprietary Nvidia hardware that adds to a display’s price. This is why G-Sync and G-Sync Ultimate monitors are becoming less common.



However, no matter how advanced the specifications are, the monitor’s refresh rate and the graphics card’s frame rate need to be synced. Without the synchronization, gamers will experience a poor gaming experience marred with tears and judders. Manufacturers such as NVIDIA, AMD, and VESA have developed different display technologies that help sync frame rates and refresh rates to eliminate screen tearing and minimize game stuttering. And one such technology is Adaptive Sync.

Traditional monitors tend to refresh their images at a fixed rate. However, when a game requires higher frame rates outside of the set range, especially during fast-motion scenes, the monitor might not be able to keep up with the dramatic increase. The monitor will then show a part of one frame and the next frame at the same time.

As an example, imagine that your game is running at 90 FPS (Frames Per Second), but your monitor’s refresh rate is 60Hz. This means your graphics card is producing 90 updates per second while the display only performs 60. This mismatch leads to split images – almost like a tear across the screen. These lines will take the beautiful viewing experience away and hamper any gameplay.
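The 90 FPS on 60Hz case can be checked with a small idealised count. The sketch below assumes perfectly even frame pacing, no V-Sync, and that the first frame and first refresh start at the same instant; the function name is hypothetical. A refresh is counted as torn when a new frame arrives strictly partway through it.

```python
import math
from fractions import Fraction

def torn_refreshes_per_second(fps, refresh_hz):
    """Count refresh cycles in one second during which a new frame
    arrives mid-scanout (idealised model, see assumptions above)."""
    torn = 0
    for j in range(refresh_hz):
        start = Fraction(j, refresh_hz)
        end = Fraction(j + 1, refresh_hz)
        # Index of the first frame arriving strictly after this refresh starts.
        k = math.floor(start * fps) + 1
        if Fraction(k, fps) < end:
            torn += 1
    return torn

print(torn_refreshes_per_second(90, 60))  # 60: every refresh mixes two frames
print(torn_refreshes_per_second(60, 60))  # 0: frames line up with refreshes
```

Exact fractions are used instead of floats so that a frame landing precisely on a refresh boundary is not miscounted because of rounding.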

In every game, different scenes demand varying amounts of rendering work. The more effects and details a scene has (such as explosions and smoke), the longer each frame takes to render, so the framerate varies from scene to scene. Instead of trying to render at the same framerate across all scenes, whether they are graphics-intensive or not, it makes more sense to sync the refresh rate accordingly.

Developed by VESA, Adaptive Sync adjusts the display’s refresh rate to match the GPU’s outputting frames on the fly. Every single frame is displayed as soon as possible to prevent input lag and not repeated, thus avoiding game stuttering and screen tearing.

Outside of gaming, Adaptive Sync can also be used to enable seamless video playback at various framerates, anywhere from 23.98 to 60 fps. It changes the monitor’s refresh rate to match the framerate of the video content, thus banishing video stutters and even reducing power consumption.
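To see where video stutter comes from on a fixed-rate display, the sketch below counts how many refresh cycles each video frame is held for. It uses a round 24 fps as a stand-in for film-rate content and a hypothetical function name; it is an illustration of the arithmetic, not a description of any player's scheduling.

```python
import math
from fractions import Fraction

def refreshes_per_frame(video_fps, refresh_hz, frames):
    """How many refresh cycles each of the first `frames` video frames
    occupies on a display running at a fixed refresh_hz."""
    ratio = Fraction(refresh_hz) / Fraction(video_fps)
    return [
        math.floor((k + 1) * ratio) - math.floor(k * ratio)
        for k in range(frames)
    ]

print(refreshes_per_frame(24, 60, 4))  # [2, 3, 2, 3]: uneven hold times
print(refreshes_per_frame(24, 24, 4))  # [1, 1, 1, 1]: refresh matched to video
```

At a fixed 60Hz, frames alternate between two and three refreshes, which is perceived as judder. When the refresh rate is matched to the content, every frame is held for the same duration.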

Unlike V-Sync which caps your GPU’s frame rate to match with your display’s refresh rate, Adaptive Sync dynamically changes the monitor’s refresh rate in response to the game’s required framerates to render. This means it does not only annihilate screen tearing but also addresses the juddering effect that V-Sync causes when the FPS falls.

To illustrate Adaptive Sync with a diagram explained by VESA, you will see that Display A will wait till Render B is completed and ready before updating to Display B. This ensures that each frame is displayed as soon as possible, thus reducing the possibility of input lag. Frames will not be repeated within the display’s refresh rate set to avoid game stuttering. It will adapt the refresh rate to the rendering framerate to avoid any screen tearing.

NVIDIA G-Sync uses the same principle as Adaptive Sync. But it relies on proprietary hardware that must be built into the display. With the additional hardware and strict regulations enforced by NVIDIA, monitors supporting G-Sync have tighter quality control and are more premium in price.

Both solutions are also hardware bound. If you own a monitor equipped with G-Sync, you will need to get an NVIDIA graphics card. Likewise, a FreeSync display will require an AMD graphics card. However, AMD has also released the technology for open use as part of the DisplayPort interface. This allows anyone to enjoy FreeSync on competing devices. There are also G-Sync Compatible monitors available in the market to pair with an NVIDIA GPU.

Last year we wrote about NVIDIA G-SYNC, the revolutionary dynamic synchronization technology which synchronizes the monitor refresh rate with the refresh rate of the GPU in order to get rid of so-called “screen tearing” and other artifacts like stutter and input lag.

As we already know, NVIDIA’s G-SYNC requires a special module that gets embedded into the control board of the display panel and manages the synchronization between the graphics card and the monitor. The advantage of this approach is that the technology relies only on the G-SYNC module inside the monitor and is independent from the GPU’s integrated display controller, which makes it compatible with older graphics cards. The downside of this is the additional hardware (G-SYNC module), which is not cheap. NVIDIA also requires that the monitor manufacturers pay a licensing fee in order to integrate this technology in their monitors, which also adds to the cost.

AMD’s FreeSync approach is different. It is based on the Adaptive Sync open standard that’s already embedded into DisplayPort and, in addition, on the integrated display controller inside AMD’s GPUs. FreeSync supports a wider range of FPS – 9 to 240 Hz against NVIDIA’s 30-144Hz. Of course, the implementation of such support is entirely up to the monitor manufacturer – they can make monitors with any range in mind that falls between 9Hz-240Hz. Another important advantage of FreeSync is that it supports the standard monitor features without affecting gaming performance. DisplayPort also supports transmission of audio in parallel with video, whereas G-SYNC monitors only support DisplayPort inputs and do not allow for anything other than the most basic monitor features, with the G-SYNC module supporting only very preliminary color processing and no audio output.

The bottleneck of the FreeSync technology is that it is compatible only with the most recent AMD GPUs and does not support the previous generations of their graphics cards. It may be seen as a potential problem for users who would have to invest into a newer graphics card, but AMD says that all future GPUs from the company will support the technology anyway.

Both FreeSync and G-SYNC function pretty much the same when your game’s FPS falls within the variable refresh rate range of the monitor, apart from a very minor (~2%) performance penalty with G-SYNC. However, if a game’s FPS falls below the minimum refresh rate of the monitor, G-SYNC and FreeSync handle the situation quite differently.

Earlier versions of monitors with FreeSync would revert to a fixed refresh rate that matches the lowest refresh rate the monitor is capable of. However, users can choose manually whether to enable V-Sync in order to avoid tearing (in exchange for input latency, though) or continue to play without V-Sync and get screen tearing.

G-SYNC deals with the situation in pretty much the same way, with the only difference being that the monitor will revert back to a maximum fixed refresh rate, which also will result in an increase of input lag, but not as severe as FreeSync. What is disappointing with G-SYNC in this case is that it doesn’t give the user an opportunity to switch V-Sync on or off – it would always stay on and will not allow users to get rid of that latency in exchange for screen tearing. Nevertheless, having a monitor fixed at the maximum refresh rate is still a better solution rather than at the minimum.

In 2015, AMD officially announced that they had updated the drivers to enable FreeSync behavior at the maximum refresh rate when the FPS drops below the FreeSync operating range, which basically eliminated that difference with NVIDIA’s G-SYNC.

As we can see, G-SYNC and FreeSync do not have any critical differences between each other. Both solutions are great at providing a smooth image when playing games on the PC and both have their pros and cons. So, there is no simple answer to the question “Which is the best?”

Users note having FreeSync enabled reduces tearing and stuttering, but some monitors exhibit another problem: Ghosting. As objects move on the screen, they leave shadowy images of their last position. It’s an artifact that some people don’t notice at all, but it annoys others.

Many fingers point at what might cause it, but the physical reason for it is power management. If you don’t apply enough power to the pixels, your image will have gaps in it — too much power, and you’ll see ghosting. Balancing the adaptive refresh technology with proper power distribution is hard.

Both FreeSync and G-Sync also suffer when the frame rate isn’t consistently syncing within the monitor’s refresh range. G-Sync can show problems with flickering at very low frame rates, and while the technology usually compensates to fix it, there are exceptions. FreeSync, meanwhile, has stuttering problems if the frame rate drops below a monitor’s stated minimum refresh rate. Some FreeSync monitors have an extremely narrow adaptive refresh range, and if your video card can’t deliver frames within that range, problems arise.

Most reviewers who’ve compared the two side-by-side seem to prefer the quality of G-Sync, which does not show stutter issues at low frame rates and is thus smoother in real-world situations. It’s also important to note that upgrades to syncing technology (and GPUs) are slowly improving these problems for both technologies.

One of the first differences you’ll hear people talk about with adaptive refresh technology, besides the general rivalry between AMD and Nvidia, is the difference between a closed and an open standard. While G-Sync is proprietary Nvidia technology and requires the company’s permission and cooperation to use, FreeSync is free for any developer or manufacturer to use. Thus, there are more monitors available with FreeSync support.

You won’t end up paying much extra for a monitor with FreeSync. There’s no premium for the manufacturer to include it, unlike G-Sync. FreeSync in the mid-hundreds frequently comes with a 1440p display and a 144Hz refresh rate (where their G-Sync counterparts might not), and monitors without those features can run as low as $160.

FreeSync Premium Pro: Previously known as FreeSync 2 HDR, this premium version of FreeSync is specifically designed for HDR content, and if monitors support it, then they must guarantee at least 400 nits of brightness for HDR, along with all the benefits found with FreeSync Premium.

Nvidia’s G-Sync options are tiered, with G-Sync compatible at the bottom, offering basic G-Sync functionality in monitors that aren’t designed with G-Sync in mind (some Freesync monitors meet its minimum requirements). G-Sync is the next option up, with the most capable of monitors given G-Sync Ultimate status:

G-Sync from Nvidia and FreeSync from AMD both come with features that can improve your gaming experience. When you compare the two, the G-Sync monitors come with a feature list that is slightly better, especially for the products rated at the G-Sync Ultimate level. That said, the difference between the two isn’t so great that you should never buy a FreeSync monitor. Indeed, if you already have a decent graphics card, then buying a monitor to go with your GPU makes the most sense (side note for console owners: Xbox Series X supports FreeSync and PS5 is expected to support it in the future, but neither offers G-Sync support).

If you need to save a few bucks, FreeSync monitors and FreeSync-supported GPUs cost a bit less, on average. For example, the AMD Radeon RX 590 graphics card costs around $200. That said, all of the power-packed graphics cards were pretty difficult to find in the early part of 2021. It may be best to wait a few months and then buy a new RX 6000 card for a more budget-friendly price instead of buying MSRP right now.

But what is G-Sync tech? For the uninitiated, G-Sync is Nvidia's name for its frame synchronization technology. It makes use of dedicated silicon in the monitor so it can match your GPU's output to your gaming monitor's refresh rate, for the smoothest gaming experience. It removes a whole load of guesswork in getting the display settings right, especially if you have an older GPU. The catch is that the tech only works with Nvidia GPUs.

Here's where things might get a little complicated: G-Sync features do work with AMD's adaptive FreeSync tech monitors, but not the other way around. If you have an AMD graphics card, you'll for sure want to check out the best FreeSync monitors along with checking our overall best gaming monitors for any budget.

The PG32UQX is easily one of the best panels I've used to date. The colors are punchy yet accurate and that insane brightness earns the PG32UQX the auspicious DisplayHDR 1400 certification. However, since these are LED zones and not self-lit pixels like an OLED, you won't get those insane blacks for infinite contrast.

Mini-LED monitors do offer full-array local dimming (FALD) for precise backlight control, though. What that means for the picture we see is extreme contrast from impressive blacks to extremely bright DisplayHDR 1400 spec.

Beyond brightness, you can also expect color range to boast about. The colors burst with life and the dark hides ominous foes for you to slay in your quest for the newest loot.

That rapid 144Hz refresh rate is accompanied by HDMI 2.0 and DisplayPort 1.4 ports. Two USB 3.1 ports join the action, with a further USB 2.0 port sitting on the top of the monitor to connect your webcam.

As for its G-Sync credentials, the ROG Swift delivers G-Sync Ultimate, which is everything a dedicated G-Sync chip can offer in terms of silky smooth performance and support for HDR. So if you want to brag with the best G-Sync gaming monitor around, this is the way to do it. However, scroll on for some more realistic recommendations in terms of price.

OLED has truly arrived on PC, and in ultrawide format no less. Alienware's 34 QD-OLED is one of very few gaming monitors to receive such a stellar score from us, and it's no surprise. Dell has nailed the OLED panel in this screen and it's absolutely gorgeous for PC gaming. Although this monitor isn’t perfect, it is dramatically better than any LCD-based monitor by several gaming-critical metrics. And it’s a genuine thrill to use.

What that 34-inch, 21:9 panel can deliver in either of its HDR modes—HDR 400 True Black or HDR Peak 1000—is nothing short of exceptional. The 3440 x 1440 native resolution image it produces across that gentle 1800R curve is punchy and vibrant. With 99.3% coverage of the demanding DCI-P3 colour space, and fully 1,000 nits brightness, it makes a good go, though that brightness level can only be achieved on a small portion of the panel.

Still, there’s so much depth, saturation and clarity to the in-game image thanks to that per-pixel lighting, but this OLED screen needs to be in HDR mode to do its thing. And that applies to SDR content, too. HDR Peak 1000 mode enables that maximum 1,000 nit performance in small areas of the panel but actually looks less vibrant and punchy most of the time.

Burn-in is the great fear and that leads to a few quirks. For starters, you’ll occasionally notice the entire image shifting by a pixel or two. The panel is actually overprovisioned with pixels by about 20 in both axes, providing plenty of leeway. It’s a little like the overprovisioning of memory cells in an SSD and it allows Alienware to prevent static elements from “burning” into the display over time.

Latency is also traditionally a weak point for OLED, and while we didn’t sense any subjective issue with this 175Hz monitor, there’s little doubt that if your gaming fun and success hinges on having the lowest possible latency, there are faster screens available. You can only achieve the full 175Hz with the single DisplayPort input, too.

The Alienware 34 QD-OLED’s response time is absurdly quick at 0.1ms, and it cruised through our monitor testing suite. You really notice that speed in-game, too.

There’s no HDMI 2.1 on this panel, however. So it’s probably not the best fit for console gaming as a result. But this is PC Gamer, and if you’re going to hook your PC up to a high-end gaming monitor, we recommend it be this one.

4K gaming is a premium endeavor. You need a colossal amount of rendering power to hit decent frame rates at such a high resolution. But if you’re rocking a top-shelf graphics card, like an RTX 3080, RTX 3090, or RX 6800 XT, then this dream can be a reality, at last. While the LG 27GN950-B is a fantastic gaming panel, it’s also infuriatingly flawed.

The LG UltraGear is the first 4K Nano IPS gaming monitor with 1ms response times, and it’ll properly show off your superpowered GPU. Coming in with Nvidia G-Sync and AMD’s FreeSync adaptive refresh compatibility, this slick slim-bezel design even offers LG’s Sphere Lighting 2.0 RGB visual theatrics.

And combined with the crazy-sharp detail that comes with the 4K pixel grid, that buttery smooth 144Hz is pretty special.

It does suffer from a little characteristic IPS glow, which appears mostly at the screen extremities when you’re spying darker game scenes, but it isn’t an issue most of the time. The HDR is a little disappointing as, frankly, 16 edge-lit local dimming zones do not a true HDR panel make.

What is most impressive, however, is the Nano IPS tech that offers a wider color gamut and stellar viewing angles. And the color fidelity of the NanoIPS panel is outstanding.

The LG UltraGear 27GN950-B bags you a terrific panel with exquisite IPS image quality. Despite the lesser HDR capabilities, it also nets beautiful colors and contrast for your games too. G-Sync offers stable pictures and smoothness, and the speedy refresh rate and response times back this up too.

That makes this a versatile piece of kit, and that 3840 x 2160 resolution is enough to prevent any pixelation across this generous, 32-inch screen. The 16:9 panel doesn’t curve, but does offer a professional-level, sub-1ms grey-to-grey (GTG) response time.

Still, the MSI Optix MPG321UR does come with a 600 nit peak brightness, and VESA HDR 600 certification, alongside 97% DCI-P3 colour reproduction capabilities. All this goes toward an amazingly vibrant screen that’s almost accurate enough to be used for professional colour grading purposes.

The Optix is one of MSI’s more recent flagship models, so you know you’re getting serious quality and performance. Its panel looks gorgeous, even at high speeds, managing a 1ms GTG response time.

Though MSI’s Optix is missing a physical G-Sync chip, it’ll still run nicely with any modern Nvidia GPU, or AMD card if you happen to have one of those lying around.

The Xeneon is Corsair’s attempt at breaking into the gaming monitor market. To do that, the company has opted for 32 inches of IPS panel at 1440p resolution. Once again we’re looking at a FreeSync Premium monitor that has been certified to work with GeForce cards by Nvidia.

It pretty much nails the sweetspot for real-world gaming, what with 4K generating such immense levels of GPU load and ultrawide monitors coming with their own set of limitations.

The 2,560 by 1,440 pixel native resolution combined with the 32-inch 16:9 aspect panel proportions translates into sub-100 DPI pixel density. That’s not necessarily a major problem in-game. But it does make for chunky pixels in a broader computing context.
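For anyone who wants to check that pixel density figure, here is a rough sketch of the sum. It assumes square pixels and takes the quoted diagonal at face value; the function name `pixels_per_inch` is ours, not anything from Corsair’s spec sheet.

```python
import math


def pixels_per_inch(width_px, height_px, diagonal_in):
    """Pixel density from native resolution and diagonal size in inches."""
    # The diagonal in pixels comes from Pythagoras on the pixel grid.
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


xeneon_ppi = pixels_per_inch(2560, 1440, 32)
uhd_32_ppi = pixels_per_inch(3840, 2160, 32)

print(f"32-inch 1440p: {xeneon_ppi:.1f} PPI")  # 32-inch 1440p: 91.8 PPI
print(f"32-inch 4K:    {uhd_32_ppi:.1f} PPI")  # 32-inch 4K:    137.7 PPI
```

So a 32-inch 1440p panel lands at roughly 92 pixels per inch, while the same diagonal at 4K is nearer 138.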

That sub-3ms response, combined with a 165Hz refresh, means the thing isn’t a slouch when it comes to gaming capability, though there are certainly more impressive gaming monitors out there.

The two HDMI 2.0 sockets are limited to 144Hz, and the DisplayPort 1.4 interface is predictable enough. But the USB Type-C with power delivery for a single cable connection with charging to a laptop is a nice extra. Or, at least, it would be if the charging power wasn’t limited to a mere 15W, which is barely enough for something like a MacBook Air, let alone a gaming laptop.

The core image quality is certainly good, though. It’s punchy, vibrant, and well-calibrated. And while it’s quite pricey for a 1440p model, it delivers all it sets out to with aplomb. On the whole, the Corsair Xeneon 32QHD165 doesn’t truly excel at anything, but it’s still a worthy consideration in 2022.

The XB273K’s gaming pedigree is obvious the second you unbox it: it is a 27-inch, G-Sync compatible, IPS screen that boasts a 4ms gray-to-gray response time and a 144Hz refresh rate. While that may not sound like a heck of a lot compared to some of today’s monitors, it also means you can bag it for a little less.

Assassin’s Creed Odyssey looked glorious. This monitor put on an incredibly vivid showing, and has the crispest of image qualities to boot; there are no blurred or smudged edges to see, and each feature looks almost perfectly defined.

The contrasts are particularly strong with any colors punching through the greys and blacks. However, the smaller details here are equally good, down to clothing detail, skin tone and complexion, and facial expressions once again. There is an immersion-heightening quality to the blacks and grays of the Metro and those games certainly don’t feel five years old on the XB273K.

The Predator XB273K is one for those who want everything now and want to future-proof themselves in the years ahead. It might not have the same HDR heights that its predecessor, the X27, had, but it offers everything else for a much-reduced price tag. Therefore, the value it provides is incredible, even if it is still a rather sizeable investment.

Out of the box, it looks identical to the old G9. Deep inside, however, the original G9’s single most obvious shortcoming has been addressed. And then some. The Neo G9 still has a fantastic VA panel. But its new backlight doesn’t just have full-array rather than edge-lit dimming.

It packs a cutting-edge mini-LED tech with no fewer than 2,048 zones. This thing is several orders of magnitude more sophisticated than before. As if that wasn’t enough, the Neo G9’s peak brightness has doubled to a retina-wrecking 2,000 nits. What a beast.

The problem with any backlight-based rather than per-pixel local dimming technology is that compromises have to be made. Put another way, an algorithm has to decide how bright any given zone should be based on the image data. The results are never going to be perfect.
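To make that compromise concrete, here is a deliberately simple sketch of zone-based dimming. It is not Samsung’s algorithm, which is proprietary. It assumes a hypothetical policy in which each zone is driven at the level of its brightest pixel, and that the frame divides evenly into zones.

```python
def zone_backlight_levels(frame, zone_rows, zone_cols):
    """Return a zone_rows x zone_cols grid of backlight levels (0.0 to 1.0).

    frame is a list of rows of pixel luminance values between 0.0 and 1.0.
    Assumption: each zone simply follows its brightest pixel, and the frame
    dimensions divide evenly by the zone counts.
    """
    height, width = len(frame), len(frame[0])
    zone_h, zone_w = height // zone_rows, width // zone_cols
    levels = []
    for zr in range(zone_rows):
        row_levels = []
        for zc in range(zone_cols):
            # Gather every pixel that sits in front of this backlight zone.
            pixels = [
                frame[y][x]
                for y in range(zr * zone_h, (zr + 1) * zone_h)
                for x in range(zc * zone_w, (zc + 1) * zone_w)
            ]
            row_levels.append(max(pixels))
        levels.append(row_levels)
    return levels


# A 4x4 all-black frame with one bright pixel, like a star on a night sky.
frame = [[0.0] * 4 for _ in range(4)]
frame[0][1] = 1.0

levels = zone_backlight_levels(frame, 2, 2)
print(levels)  # [[1.0, 0.0], [0.0, 0.0]]
```

One bright pixel lights its entire zone, so the three black pixels sharing that zone are no longer truly black. Driving the zone at a lower level would dim the star instead. Either way something gives, which is the trade-off per-pixel OLED avoids.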

In practice, the Neo G9’s mini-LED creates as many problems as it solves. We also can’t help but observe that, at this price point, you have so many options. The most obvious alternative, perhaps, is a large-format 120Hz OLED TV with HDMI 2.1 connectivity.

G-Sync gaming monitor FAQ

What is the difference between G-Sync and G-Sync Compatible?

G-Sync and G-Sync Ultimate monitors come with a bespoke G-Sync processor, which enables a full variable refresh rate range and variable overdrive. G-Sync Compatible monitors don’t come with this chip, and that means they may have a more restricted variable refresh rate range.

Fundamentally, though, all G-Sync capable monitors offer a smoother gaming experience than those without any frame-syncing tech.

Should I go for a FreeSync or G-Sync monitor?

In general, FreeSync monitors will be cheaper. It used to be the case that they would only work in combination with an AMD GPU. The same went for G-Sync monitors and Nvidia GPUs. Nowadays, though, it is possible to find G-Sync compatible FreeSync monitors if you’re intent on spending less.

Should I go for an IPS, TN or VA panel?

We would always recommend an IPS panel over TN. The clarity of image, viewing angle, and color reproduction are far superior to the cheaper technology, but you’ll often find a faster TN for cheaper. The other alternative, less expensive than IPS and better than TN, is VA tech. The colors aren’t quite so hot, but the contrast performance is impressive.

V-Sync: Graphics tech that helps prevent screen tearing by synchronizing your GPU’s frame rate with the display’s maximum refresh rate. Turn V-Sync on in your games for a smoother experience, but you’ll lose information, so turn it off for fast-paced shooters (and live with the tearing). Useful if you have an older model display that can’t keep up with a new GPU.

G-Sync: Nvidia’s frame syncing tech that works with Nvidia GPUs. It basically allows the monitor to sync up with the GPU. It does this by showing a new frame as soon as the GPU has one ready.

FreeSync: AMD’s take on frame syncing uses a similar technique to G-Sync, with the biggest difference being that it uses DisplayPort’s Adaptive-Sync technology, which doesn’t cost monitor manufacturers anything.

TN Panels: Twisted-nematic is the most common (and cheapest) gaming panel. TN panels tend to have poorer viewing angles and color reproduction but offer higher refresh rates and faster response times.

IPS: In-plane switching panels offer the best contrast and color despite having weaker blacks. IPS panels tend to be more expensive and have slower response times.

VA: Vertical alignment panels provide good viewing angles and have better contrast than even IPS but are still slower than TN panels. They are often a compromise between a TN and IPS panel.

HDR: High Dynamic Range. HDR provides a wider color range than normal SDR panels and offers increased brightness. The result is more vivid colors, deeper blacks, and a brighter picture.

Ms.Josey
